4 robotic voice technologies explained

Take your vocals to outer space with these creative technologies and techniques

Robochat

Although there's nothing in music quite like the purity and emotional pull of a beautifully recorded human voice, electronic producers realised long ago that the larynx can also be used as the source of all manner of sci-fi-style vocal treatments.

Indeed, the unreal sounds of the vocoder, Talk Box, Auto-Tune and speech synthesis have played a significant part in shaping the landscape of dance, electronic and pop music in the 20th and 21st centuries, and in this gallery, we'll get you up to speed on all four of them.

There's plenty more vocal production action to be found in Computer Music's The Creative Guide to Vocals feature - get it now in the August edition (CM233).

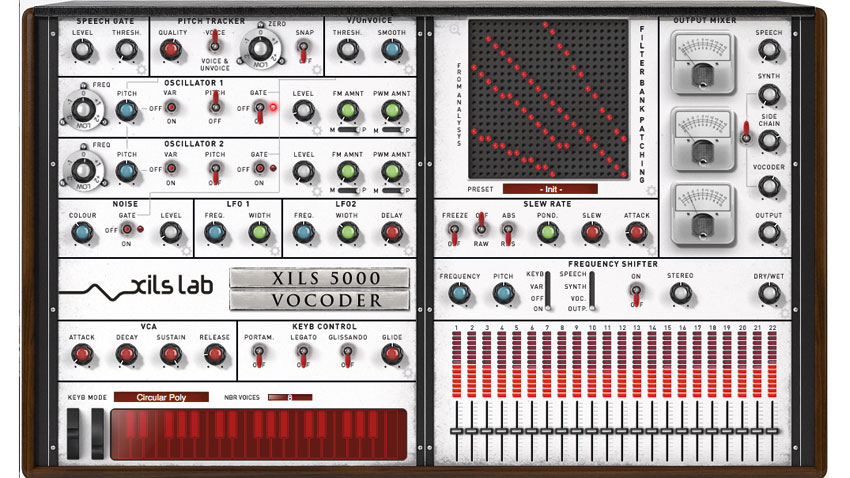

1. Vocoder

Use in music

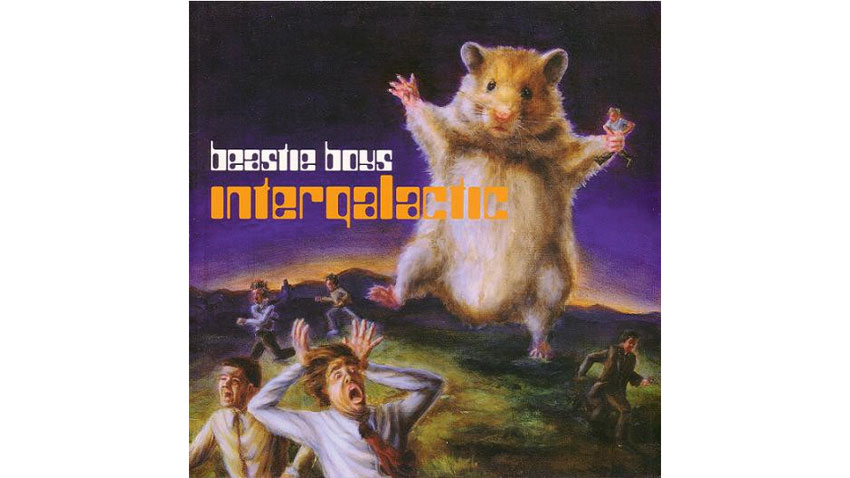

A vocoder can provide a focal point such as in the Beastie Boys’ Intergalactic, add attitude in tracks like Freestyle’s Don’t Stop The Rock, or just put a mild spin of weirdness on an otherwise dry vocal, as in Laurie Anderson’s O Superman.

While they don’t take the lead vocal role quite as often these days, vocoders are still firmly in the vocabulary of today’s music - check out Ariana Grande’s vocoder-backed a cappella performance of her track Dangerous Woman at the 2016 Billboard Music Awards for a current example.

How it works

A modulator signal (speech) and a carrier (a synth sound, ideally with plenty of harmonics) are both split into a number of frequency bands, and envelope followers on the modulator bands are used to control the level of corresponding carrier bands, which we hear. So you could either say that the vocoder makes your synth sounds sing, or that it gives your voice the timbre of a synthesiser.

The number of bands varies from vocoder to vocoder, but can range from single digits to three figures. The type of filters used also affects the outcome, and some vocoders may also offer options to map the modulator and carrier filter frequencies differently, for more unusual effects. Another common vocoder feature is to allow some of the modulator (voice) signal to pass through, usually high-passed so we hear just the top-end elements such as sibilance, to aid intelligibility.
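The band-splitting and envelope-following described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than how any particular plugin does it: the five band centres, the filter Q, the 30Hz envelope smoothing and all of the function names are assumptions chosen for brevity.

```python
import math

def bandpass(signal, centre_hz, q, sample_rate):
    """Band-pass a signal with a standard biquad (unity gain at centre_hz)."""
    w0 = 2 * math.pi * centre_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha                      # b1 is zero for this filter
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    out, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x1, x2, y1, y2 = x, x1, y, y1
    return out

def envelope(signal, sample_rate, smooth_hz=30.0):
    """Envelope follower: rectify, then smooth with a one-pole low-pass."""
    coeff = math.exp(-2 * math.pi * smooth_hz / sample_rate)
    out, level = [], 0.0
    for x in signal:
        level = coeff * level + (1 - coeff) * abs(x)
        out.append(level)
    return out

def vocode(modulator, carrier, sample_rate,
           centres=(200, 400, 800, 1600, 3200), q=4.0):
    """Let each modulator band's level control the matching carrier band."""
    n = min(len(modulator), len(carrier))
    out = [0.0] * n
    for centre_hz in centres:
        level = envelope(bandpass(modulator[:n], centre_hz, q, sample_rate),
                         sample_rate)
        band = bandpass(carrier[:n], centre_hz, q, sample_rate)
        for i in range(n):
            out[i] += level[i] * band[i]
    return out
```

Pass a voice recording as `modulator` and a bright, harmonically rich synth part as `carrier`, both as lists of samples at the same sample rate. Pure Python is slow on long audio, so treat this as a way to follow the signal flow rather than a production tool.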

Get the sound

There are lots of vocoder plugins out there, and an exhaustive list would be rather long. Off the top of our heads, though, a few we reckon you should try are mda vocoder (free), TAL-Vocoder (free), MeldaProduction MVocoder, Ableton Live’s built-in Vocoder, and Image-Line’s Vocodex (available as a Windows VST and free with most versions of FL Studio 12).

Once you’ve got your vocoder fired up, there are a few approaches to the modulator (voice) and carrier (synth). For a classic grungy, monotone robot voice, play a deep, single-note part on a bright synth patch with plenty of harmonics - try overdriving it to add more if needed. Mixing in noise can aid vocal intelligibility. If a soft, talking pad is more your bag, dial in a fairly bright pad sound and lay down your chords. As for the source voice, try brightening it with EQ or distortion, and controlling dynamics with compression or limiting for a more consistent output.

2. Auto-Tune

Use in music

Cher’s 1998 track Believe was intended to repackage the decade-spanning diva for a new audience. Arguably crucial to its chart-wrecking worldwide success were its novel vocal effects, the like of which had never been heard before.

The track’s producers weren’t giving anything away about how the effect was achieved, but as we now know, they used Antares Auto-Tune. This software was designed for invisible correction of off-key notes, but here its settings were pushed to extremes so that it snapped instantly to ‘correct’ notes, turning glissandos and pitch variations into runs of specific notes.

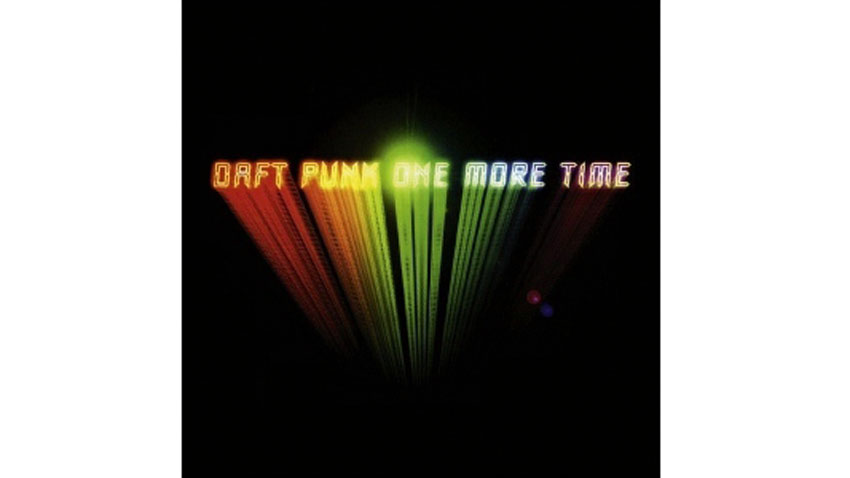

Robo-lovers Daft Punk put out the next major hit to feature the effect. One More Time featured house singer Romanthony’s vocals slathered in a rich coat of Auto-Tune. From there, Antares' amazing plugin became a common vocal treatment, particularly in pop and R&B production. Self-styled “rappa ternt sanga” T-Pain is its most infamous (ab)user, his vocal constantly processed, as on Buy U A Drank. He claims to use Auto-Tune to give himself a distinctive vocal sound, and you might assume it also helps conceal a terribly dodgy singing voice - but you’d be wrong!

How it works

Auto-Tune works by analysing a waveform and essentially ‘cutting out’ individual cycles. These snippets are then replayed at the desired correct frequency - a bit like how we quantise parts in MIDI to an exact timing - to give a retuned vocal with the timbre of the original. The timbre itself can be adjusted by modifying individual wave cycles then replaying them at the required rate. Stretching or contracting the wave cycles shifts their formants and can give an impression of altered gender, for example.
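To make the ‘cut out cycles and replay them at a new rate’ idea concrete, here is a heavily simplified sketch in the style of pitch-synchronous overlap-add. It is not Antares’ actual algorithm. It assumes the pitch period is already known and constant (a real corrector has to detect it first), and the function name and parameters are hypothetical.

```python
import math

def retune_cycles(signal, period, new_period):
    """Overlap-add two-cycle grains of the input at a new spacing.

    period and new_period are in samples; a smaller new_period raises
    the pitch. Each grain keeps its original shape, so the timbre is
    roughly preserved while the repetition rate changes.
    """
    # Hann window spanning two input cycles.
    window = [0.5 - 0.5 * math.cos(math.pi * i / period)
              for i in range(2 * period)]
    out = [0.0] * len(signal)
    centre_out = period
    while centre_out + period <= len(signal):
        # Use the input cycle closest in time to this output position.
        j = max(1, round(centre_out / period))
        centre_in = j * period
        if centre_in + period > len(signal):
            break
        for i in range(2 * period):
            out[centre_out - period + i] += (
                signal[centre_in - period + i] * window[i])
        centre_out += new_period
    return out
```

For example, `retune_cycles(voice, period=80, new_period=64)` raises an 80-sample pitch period to a 64-sample one, which is roughly four semitones up. Stretching or squashing each grain before adding it back, rather than just re-spacing it, would shift the formants as described above.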

Get the sound

Obviously, Antares Auto-Tune will give you the classic auto-tuned effect, but there are plenty of other options for achieving it, such as Celemony Melodyne, Waves Tune, iZotope Nectar, and even the free MAutoPitch from MeldaProduction. As far as settings go, the main thing you need to do is select a key that fits your song, then set the retuning speed as fast as it will go (in Auto-Tune itself, that means a Retune Speed of 0).
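The two settings that matter here, key and retuning speed, can be illustrated with a small sketch that works on a list of detected pitches rather than on audio. Everything in it is an assumption made for illustration: the major scale, the function names, and a `speed` parameter where 1.0 means an instant snap (note that this is the opposite way round to Auto-Tune’s own control, where 0 is fastest).

```python
import math

MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the key's root

def snap_to_scale(freq_hz, root=0, scale=MAJOR_SCALE):
    """Return the frequency of the nearest note in the key (root 0 = C)."""
    midi = 69 + 12 * math.log2(freq_hz / 440.0)
    candidates = [n for n in range(128) if (n - root) % 12 in scale]
    nearest = min(candidates, key=lambda n: abs(n - midi))
    return 440.0 * 2 ** ((nearest - 69) / 12)

def hard_tune(pitch_track_hz, speed=1.0, root=0):
    """Glide towards each target note; speed=1.0 snaps instantly."""
    out, current = [], None
    for freq_hz in pitch_track_hz:
        # Work in semitones relative to A440 so glides are musically even.
        target = 12 * math.log2(snap_to_scale(freq_hz, root) / 440.0)
        if current is None:
            current = target
        else:
            current += speed * (target - current)
        out.append(440.0 * 2 ** (current / 12))
    return out
```

With `speed=1.0`, a smooth upward slide through the key of C major comes out as a staircase of discrete notes, which is the robotic effect in a nutshell. Lower values let the pitch lag behind its target, giving a gentler and less obvious correction.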

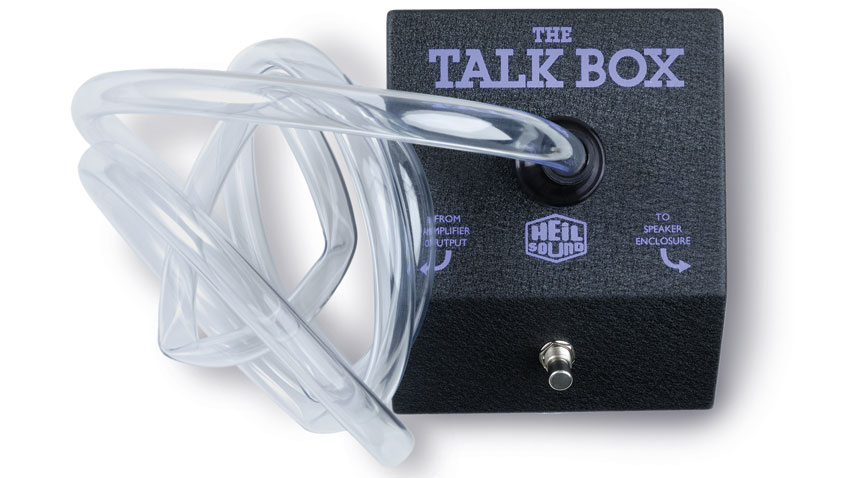

3. Talkbox

Use in music

The talkbox came to the fore in the 70s, with Peter Frampton popularising it in rock, giving his guitar a talking, vowel-like timbre. Other performers, meanwhile, used synths and electric pianos as their sound source; Stevie Wonder, for example.

The most virtuosic and downright funkiest talkboxer to have lived, though, has to be Zapp’s Roger Troutman. On the band’s 1980 track More Bounce To The Ounce, you’ll hear clearly speaking synth lines with vibrato in all the right places, and harmonised parts created by multitracking and stacking them up as you would real vocal harmonies (as opposed to just playing chords on the synth). Dr Dre would later call on Roger to perform on 2Pac’s 90s hip-hop anthem California Love. The talkbox also features on Daft Punk’s Harder, Better, Faster, Stronger and a selection of Chromeo tracks.

How it works

Often mistaken for a vocoder or Auto-Tune, the talkbox actually works via mechanical means rather than electronics or DSP. A speaker in an airtight box produces the sound to be transformed, which is then fed through a length of rubber tube shoved into the performer’s mouth. The performer shapes their mouth to imprint their own vocal formants on the source signal, and a microphone captures the results. For intelligible speech, the user must make consonant sounds in the usual way. A good talkbox performance is not easy to pull off!

Get the sound

Options for recreating talkbox tones in software are few, but you can try iZotope VocalSynth, Antares Articulator, and the free mda talkbox. You can use these with any sound source, but Roger Troutman famously used a Yamaha DX100 FM synth as his. If you’ve got NI’s FM8, you can load DX100 patches into it, and the Hard Brass, FM Sawtooth and FM Pulse presets make fine starting points. Get them from synthzone.com - the DX100 libraries are found in the ‘Yamaha Related Filed’ section near the bottom.

4. Voice synthesis

Use in music

Way back in the 30s, Homer Dudley invented the VODER (Voice Operating DEmonstratoR). In the hands of a skilled operator, this electronic device could produce intelligible speech. White-coated boffins continued to experiment, and by 1961, Bell Labs had coaxed an IBM 704 computer into singing Daisy Bell.

In the mid 70s, speech synthesis appeared in consumer devices, and the 1976 Speech+ speaking calculator used the first ever speech chip, the TSI S14001A.

It was Texas Instruments’ 1978 Speak & Spell that captured the imagination of the public, though, thrusting computer speech into the mainstream. At the time, few had heard an electronic device produce speech on its own, but now you could actually own one! Speak & Spell used ‘Linear Predictive Coding’ (LPC) to reproduce recognisable speech with meagre resources.

As for synthetic speech in music, Kraftwerk were quick on the uptake, with several examples on their 1981 album Computer World, such as Numbers. More recently, good-quality synthesised singing has become viable, and insanely popular in Japan through Yamaha’s Vocaloid series.

How it works

LPC-based synthesis uses an oscillator to model the output of the larynx and noise to represent sibilant sounds, then applies filters to recreate the formants of a human voice. Another method is concatenative synthesis, where short recorded snippets of real speech are strung together to reproduce the desired words. Or how about using additive synthesis to build a voice from scratch using sine waves? There are many voice synthesis methods, in fact, each with its own pros and cons.
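The oscillator-or-noise source feeding formant filters can be sketched as follows. This is a hand-built source-filter toy, not true LPC: a real LPC system derives its filter coefficients by analysing recorded speech, whereas the formant frequencies and bandwidths below are rough, assumed values for an ‘ah’ vowel, and all names are hypothetical.

```python
import math
import random

# Assumed (frequency Hz, bandwidth Hz) pairs approximating an 'ah' vowel.
AH_FORMANTS = ((730, 90), (1090, 110), (2440, 170))

def excitation(n, sample_rate, pitch_hz=None, seed=0):
    """Pulse train if pitch_hz is given (voiced), else white noise."""
    if pitch_hz is None:
        rng = random.Random(seed)
        return [rng.uniform(-1.0, 1.0) for _ in range(n)]
    period = round(sample_rate / pitch_hz)
    return [1.0 if i % period == 0 else 0.0 for i in range(n)]

def resonator(signal, freq_hz, bandwidth_hz, sample_rate):
    """Two-pole resonant filter standing in for a single formant."""
    r = math.exp(-math.pi * bandwidth_hz / sample_rate)
    a1 = 2 * r * math.cos(2 * math.pi * freq_hz / sample_rate)
    a2 = -r * r
    gain = 1 - r                      # rough level normalisation
    out, y1, y2 = [], 0.0, 0.0
    for x in signal:
        y = gain * x + a1 * y1 + a2 * y2
        out.append(y)
        y1, y2 = y, y1
    return out

def synth_voice(n, sample_rate, formants, pitch_hz=None):
    """Run the source through parallel formant filters and sum them."""
    source = excitation(n, sample_rate, pitch_hz)
    out = [0.0] * n
    for freq_hz, bandwidth_hz in formants:
        band = resonator(source, freq_hz, bandwidth_hz, sample_rate)
        for i in range(n):
            out[i] += band[i]
    return out
```

Calling `synth_voice(2000, 8000, AH_FORMANTS, pitch_hz=100)` gives a buzzy, monotone vowel, while leaving out `pitch_hz` and using a single high formant such as `((3000, 400),)` gives a hiss closer to a sibilant.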

Get the sound

To transform your own voice into a computerised one, try Sonic Charge’s Bitspeek or iZotope’s VocalSynth in Compuspeech mode. These break a voice down in real time, then reconstruct it, LPC-style. To create speech and singing from nothing, try Plogue’s chipspeech, which emulates retro voice chips (also check out the free Alter/Ego, which offers a more realistic, modern sound), Yamaha’s Vocaloid, and VirSyn’s Cantor.
