What is machine learning, and what does it mean for music?

AI is taking over our lives - and, it seems, our music-making. Let’s plug into The Matrix and go deeper…

As the name implies, machine learning is a form of AI in which a computer algorithm analyses data, finds patterns in it, then uses those patterns to make decisions and predict future outcomes. Deep learning is a more recent evolution of this: rather than relying on features hand-picked by humans, algorithms use many-layered ‘neural networks’, loosely modelled on the human brain, to learn directly from large amounts of data. Put simply, lines of computer code can now, to some extent, be programmed to learn for themselves, then use those learnings to perform complex operations on a scale that far surpasses human abilities.

Often described as one of the biggest advancements in software development of the past few years, this technology has been made possible by rapid advances in computing power and data storage. It is now an integral part of day-to-day life: think of how Siri or Alexa use stored data to predict what you’ll want next. Ever wondered why Facebook’s ‘People You May Know’ and those pesky suggested ads on social media are always so accurate? Spooky, huh? That’s before we even mention face recognition software, email spam filtering, image classification, fraud detection…

Yep, machine learning algorithms are everywhere, and the field of music is no exception. For us everyday music listeners here in 2019, streaming services’ algorithms drive those lists of suggestions that help you hunt down new songs and artists you’d never normally discover. Last year, Google’s Magenta research division developed the open-source NSynth Super, a synthesiser powered by their NSynth algorithm designed to create entirely new sounds by learning the acoustic qualities of existing ones.

Computer-assisted composition, meanwhile, has been around for decades: Brian Eno’s Koan-powered Generative Music 1 was released on floppy disk back in 1996. Amper Music takes this concept into the 21st century: it’s a service that uses deep learning to automatically compose music for a piece of media based on the user’s choice of ‘style’ or ‘mood’. Content creator Taryn Southern famously composed an entire track with the AI-powered assistance of Amper Music, and it’s since amassed almost 2 million plays on YouTube.

Furthermore, this tech is being used to give music producers and performers a helping hand. Audionamix’s Xtrax Stems 2 uses cloud-based machine-learning assistance to deconstruct a fully mixed stereo track into a trio of constituent sub-stems (vocal, drums and music) that can then be used for live remixes and DJ mashups.

Whether you think the machines will take over our studios or not, it’s clear that artificial intelligence technology is here to stay, and we’re witnessing the beginning of a music tech revolution.

Do robots have musical dreams?

Software maker iZotope has used machine-learning tech in new releases of Ozone, Neutron and Nectar. Its CTO, Jonathan Bailey, fills us in…

The terms ‘machine learning’ and ‘deep learning’ are used a lot these days. What exactly do they mean, in layman’s terms?

“Machine learning refers to specific techniques within the broader field of AI that allow a system to find patterns within large amounts of data or to make a decision in response to previously unseen data. A common example is facial recognition technology. The software on your phone has obviously never seen your photos before – because they didn’t exist before you took them – and yet it can identify (‘classify’) faces and group (‘cluster’) them.

“Machine learning techniques have been around for decades, largely centered around the use of neural networks. Neural networks are connected, statistical models that are inspired by the way the neurons in your brain function as a system of connected nodes.

“Over the past ten years, two forces have combined to allow for breakthroughs in the use of machine learning techniques: the explosion of digital data, and the cheap availability of computing resources (due to cloud computing solutions such as Amazon Web Services). This is where deep learning comes in. Deep learning refers to the use of highly complex neural network models that use several layers of nodes, connected in complicated configurations that require powerful computers to train – on large data sets – and operate.”
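To make the idea of ‘layers of nodes’ a little more concrete, here is a minimal sketch of how data flows through a tiny neural network. It is an illustration only: the weights are hand-picked, hypothetical numbers rather than trained values, the function names are our own, and a real deep learning model would have far more layers and nodes and would be built with a framework such as TensorFlow.

```python
import math

def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer of nodes: each node takes a weighted sum of all the
    inputs, adds its bias, then squashes the result."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each layer in turn; the outputs of one
    layer become the inputs of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations

# Hypothetical, hand-picked weights (a trained network would learn these).
network = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # 3 inputs -> 2 nodes
    ([[1.0, -1.0]], [0.0]),                              # 2 nodes -> 1 output
]

output = forward([0.2, 0.7, 0.1], network)
print(output)  # a single value between 0 and 1
```

‘Training’ is the process of nudging those weights, over many examples, until the output is useful. That is the part that needs the big data sets and computing power described above.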

How does machine/deep learning help improve software tools for musicians and audio professionals?

“iZotope has invested heavily in these techniques over the past few years. One example comes from Neutron, our intelligent channel strip, which uses deep learning to identify (‘classify’) which instrument is represented by the audio in any given track in your music session. Based on that categorisation, and some additional acoustical traits we analyse within the audio, we make a recommendation for which dynamics, EQ and/or exciter settings to apply to prepare that track for your mix.
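The ‘classify, then recommend’ flow described here can be sketched in a few lines. This is not iZotope’s method: Neutron uses deep learning, whereas this toy swaps in a much simpler nearest-match classifier, and every feature value, instrument profile and preset below is a made-up assumption for illustration.

```python
import math

# Hypothetical average features per instrument:
# (brightness 0..1, percussiveness 0..1). Invented for illustration.
INSTRUMENT_PROFILES = {
    "vocal": (0.55, 0.20),
    "bass": (0.10, 0.30),
    "drums": (0.60, 0.90),
}

# Hypothetical starting-point settings for each instrument.
PRESETS = {
    "vocal": {"eq": "high-pass plus presence lift", "compressor_ratio": 3.0},
    "bass": {"eq": "low-mid cut", "compressor_ratio": 4.0},
    "drums": {"eq": "low-end and air boost", "compressor_ratio": 2.0},
}

def classify(features):
    """Return the instrument whose profile is closest to the features."""
    return min(
        INSTRUMENT_PROFILES,
        key=lambda name: math.dist(features, INSTRUMENT_PROFILES[name]),
    )

def recommend(features):
    """Classify the track, then look up suggested settings for it."""
    instrument = classify(features)
    return instrument, PRESETS[instrument]

# A bright, very percussive track (again, made-up numbers).
instrument, settings = recommend((0.58, 0.85))
print(instrument, settings)
```

In a real product, the hand-written profiles would be replaced by a trained neural network, and the recommendation would also take the additional acoustical traits of the audio into account.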

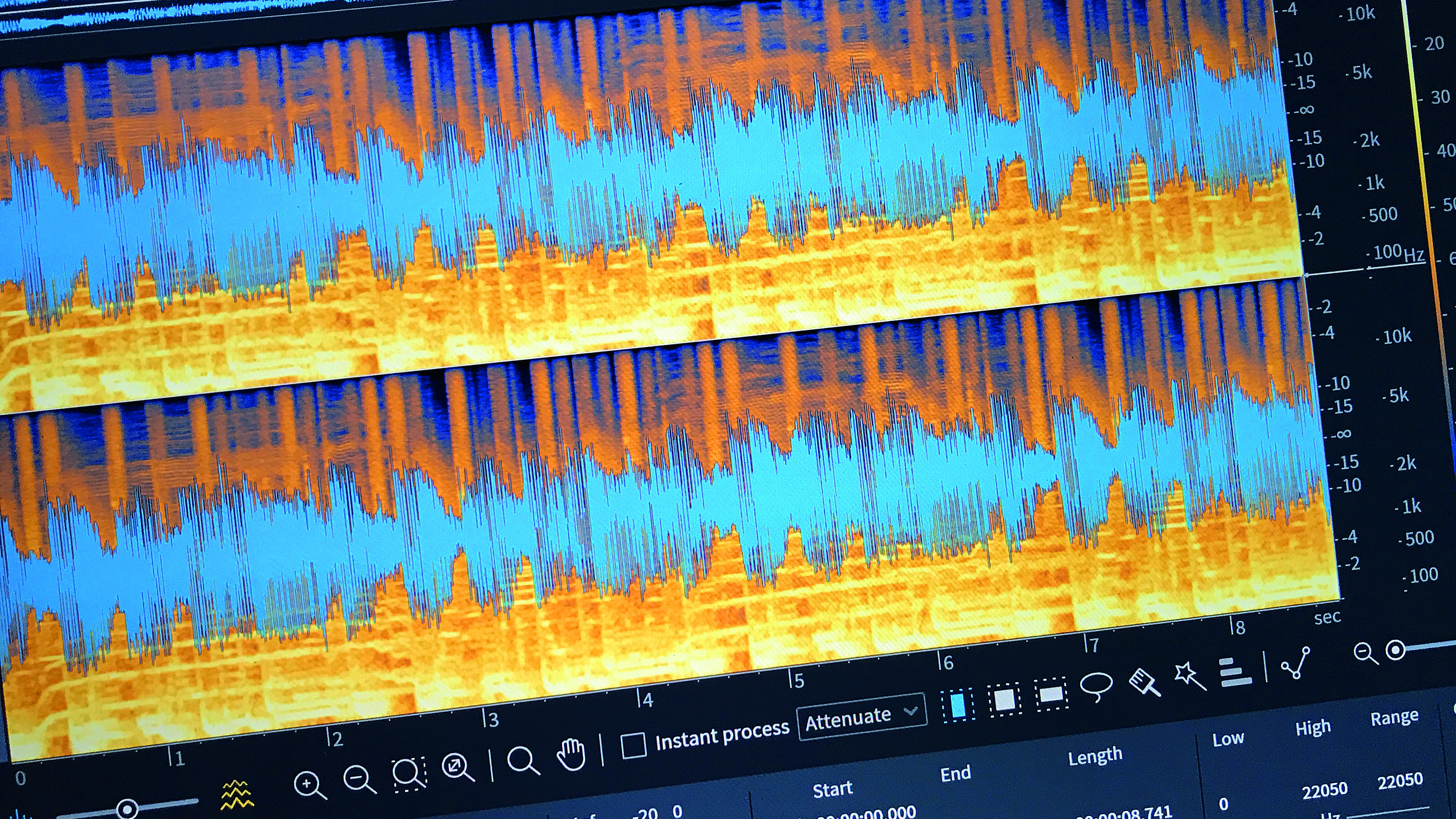

“We’re now using deep learning to not only analyse audio content but also process it. In our recent release of RX 7, the Music Rebalance feature uses deep learning to ‘unmix’ a musical mixture into individual stems that can be rebalanced or otherwise processed separately. We’re exploring how deep learning might be used to synthesise content in the future.”
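Once a mix has been ‘unmixed’, rebalancing it is the easy part. The sketch below assumes the separation has already been done and that each stem is simply a list of audio samples; it shows only the final step of changing each stem’s level and summing the stems back together. The stem names, sample values and function names are hypothetical, and this says nothing about how RX 7 works internally.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def rebalance(stems, gains_db):
    """Scale each stem by its gain and sum the results into one mix.

    stems: dict of stem name -> list of samples (all the same length)
    gains_db: dict of stem name -> level change in dB (missing = 0 dB)
    """
    length = len(next(iter(stems.values())))
    mix = [0.0] * length
    for name, samples in stems.items():
        gain = db_to_gain(gains_db.get(name, 0.0))
        for i, sample in enumerate(samples):
            mix[i] += gain * sample
    return mix

# Two tiny, made-up stems, two samples each.
stems = {"vocal": [1.0, 0.5], "drums": [0.2, -0.2]}

# Pull the vocal down by 20 dB and leave the drums untouched.
quieter_vocal = rebalance(stems, {"vocal": -20.0})
print(quieter_vocal)
```

The hard problem, and the one deep learning is being used for, is producing those separate stems from a finished stereo mix in the first place.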

What are the big pros and cons?

“Deep learning has solved some problems we struggled to solve in the past. For example, many of our customers asked us for a way to remove lavalier microphone rustle noise from recordings, which was tough to solve even using our powerful spectral analysis and processing technology.

“For companies interested in developing this capability, using techniques from deep learning is getting easier, but it’s still not that easy. One of the main challenges in implementing a working deep learning solution is having access to good training data. This is kind of new territory for companies that have traditionally focused on algorithm development. The software you use to create a neural network is freely available, commodity technology (Google TensorFlow is a common example). As I say, for companies of a certain size, access to large amounts of computing power is reasonably affordable. Data has become a big bottleneck and poses an interesting problem. Google gives their software away, and charges pennies for their cloud computing service, but they closely guard their data.

“That said, deep learning is not a panacea. We still rely heavily on knowledge that comes from the canon of digital signal processing. Learning how to effectively use and train a deep neural network is getting easier, but the cutting-edge research is still done by highly skilled scientists (usually with PhDs). Neural networks can be very difficult to debug and sometimes they function as a kind of ‘black box’ – you don’t totally know what’s going on inside. They are also computationally and resource intensive, which makes engineering them to work in certain real-time applications – such as synthesisers or audio plugins – very challenging.

“Deep learning makes for an exciting story, but ultimately, we want the magic to be in the result a customer gets, not how she got there.”

How can musicians harness this technology while retaining creativity?

“There are a couple of different research camps. One comes from the world of musicology and is focused on algorithmic musical composition. In this space you have Amper Music, which has a product that can generate music for your content, like your YouTube video or ad. Others focus on applications like auto-accompaniment. So some groups are trying to automate creativity, and others are trying to enhance it.

“This is a really delicate balance but iZotope is firmly in the camp of enhancing creativity. I greatly admire research teams like Google Magenta, whose stated purpose is to use machine learning to create art – but that’s not iZotope’s philosophy or strategy. We want to use deep learning to help you create your art. We are currently more focused on technical applications, but I do see us pushing into more creative domains as long as we stay true to our purpose of enabling creativity. We’re not out to replace human creativity.”

So will software end up writing and mixing our music for us?

“In some cases, it already is. If you’re a great singer-songwriter but you’ve never opened up a DAW in your life, deep learning will be able to help you get a great-sounding recording without having to learn what a compressor is. If you work in a DAW all day, it will learn what effects you like and don’t like, what visual and auditory information you need to get your work done, and allow you to focus on the music itself.

“Photography was supposed to kill painting. It didn’t. I have faith in our ability to invent new ideas.”
