A short history of AI in music production

Not too long ago, the very idea of computer-driven musical decision-making seemed like sci-fi. So, how did AI grow from the giddy witterings of futurists to an everyday norm?

While many manufacturers and tech companies enthusiastically point to their AI-driven wares as an indicator of their cutting-edge status, explorations into computer-assisted music-making have been going on since at least the 1950s.

It should be made clear at the outset that the term ‘AI’, when applied to music-making, encompasses a few rather different things. There’s the development of so-called ‘smart’ algorithms that we now commonly encounter in mixing or audio editing plugins.

Many of these actually lean on a wealth of human experience to apply frequency-correction or normalisation presets. Elsewhere, there are bona fide virtual brains, such as the ones driving Sonible’s stable of exemplary AI plugins, or mastering platform LANDR’s ‘Synapse’. These analyse the specifics of your audio and determine the best course of action based on their particular field of expertise.

Play it again, RAM

Beyond music production, there’s the arguably separate universe of composition, where AI has made a major dent. There’s been notable growth in the number of music-generating services that can knock out bewilderingly beautiful music without breaking a sweat. It’s all based on an abundance of music-theory know-how, coupled with the ability to draw on a vast library of pre-existing music.

A leading example is Aiva, a subscription-based music engine that relies on deep learning algorithms to improve its ability to make dazzling music, and can be tailored to a huge range of genres and styles. Aiva’s virtual brain is founded on a pattern-forming model of how a human brain works: a neural network that recalls its past experiences and prior problem-solving to continually refine its results. This is often described as ‘reinforcement’ learning, whereby the AI manoeuvres its way through huge amounts of data (such as an archive of classical music, in Aiva’s case).

This brain is able to recognise patterns and commonalities, such as chord structure, melody construction and typical arrangement choices. Armed with this knowledge, it can then create a similarly structured variant. In 2022, these AI-generated tracks are increasingly indistinguishable from music composed by human beings.
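To make the idea of ‘learning patterns from a corpus, then generating a similarly structured variant’ a little more concrete, here is a deliberately tiny sketch. It is not how Aiva works: Aiva uses deep neural networks, whereas this swaps in a far simpler statistical technique, a first-order Markov chain over chord symbols. The three-progression corpus and the function names `learn_transitions` and `generate` are hypothetical, chosen purely for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(progressions):
    """Count how often each chord is immediately followed by each other chord."""
    counts = defaultdict(lambda: defaultdict(int))
    for prog in progressions:
        for current, nxt in zip(prog, prog[1:]):
            counts[current][nxt] += 1
    return counts


def generate(counts, start, length, rng):
    """Walk the learned transitions, picking each next chord in proportion to its count."""
    progression = [start]
    for _ in range(length - 1):
        followers = counts.get(progression[-1])
        if not followers:
            break  # dead end: this chord never had a successor in the corpus
        chords = list(followers)
        weights = [followers[c] for c in chords]
        progression.append(rng.choices(chords, weights=weights)[0])
    return progression


# Hypothetical 'training data': three short chord progressions.
corpus = [
    ["C", "Am", "F", "G", "C"],
    ["C", "F", "G", "C"],
    ["Am", "F", "C", "G", "Am"],
]

transitions = learn_transitions(corpus)
rng = random.Random(42)  # fixed seed so runs are repeatable
print(generate(transitions, "C", 8, rng))
```

Because every chord it picks follows a move that actually appears in the corpus, the output recombines familiar progressions rather than inventing new ones: a small-scale version of the balance between imitation and novelty that far more sophisticated systems try to strike.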

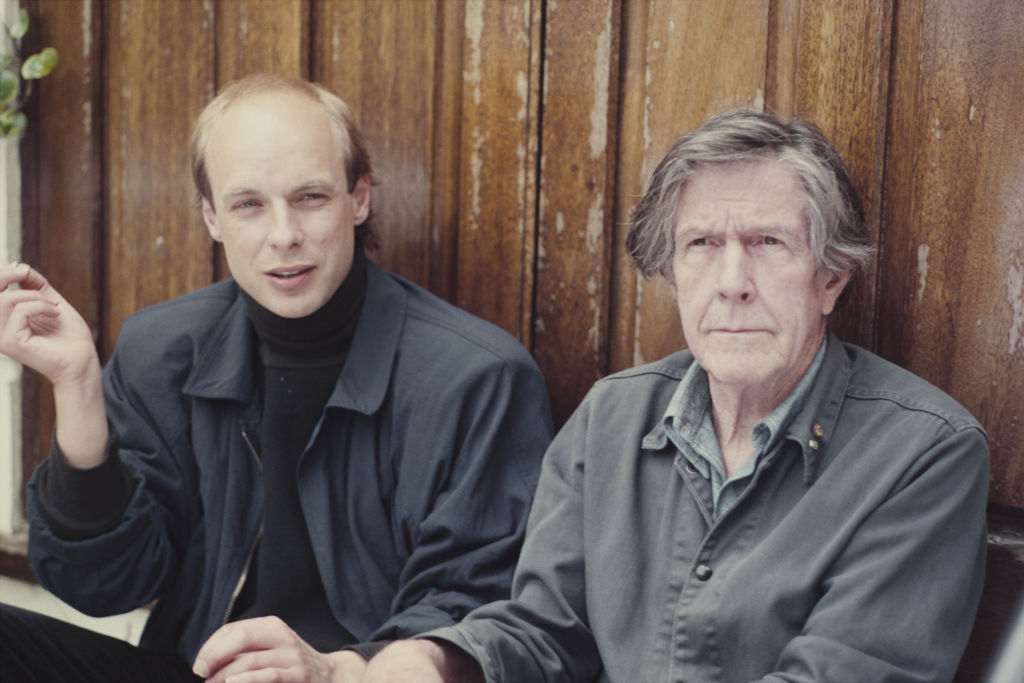

The idea of a machine that can spawn music dates back decades, with a major first step being the programming of the Illiac Suite (String Quartet No. 4) by Lejaren Hiller and Leonard Isaacson at the University of Illinois. This initial footstep would eventually lead to David Cope’s ‘Experiments in Musical Intelligence’ (EMI) program in 1997. Initially, EMI analysed Cope’s own scores and threw out new variations based on them to aid his productivity.

Before long, EMI was able to replicate the intricacies of classical composers with astonishing accuracy. “When I first started working with Bach and other composers I did it for only one reason – to refine and help me understand what style was. No other reason,” Cope reflected. Despite his singular original aim, a new data-driven paradigm of virtual music-making had been established.

Hi, robot

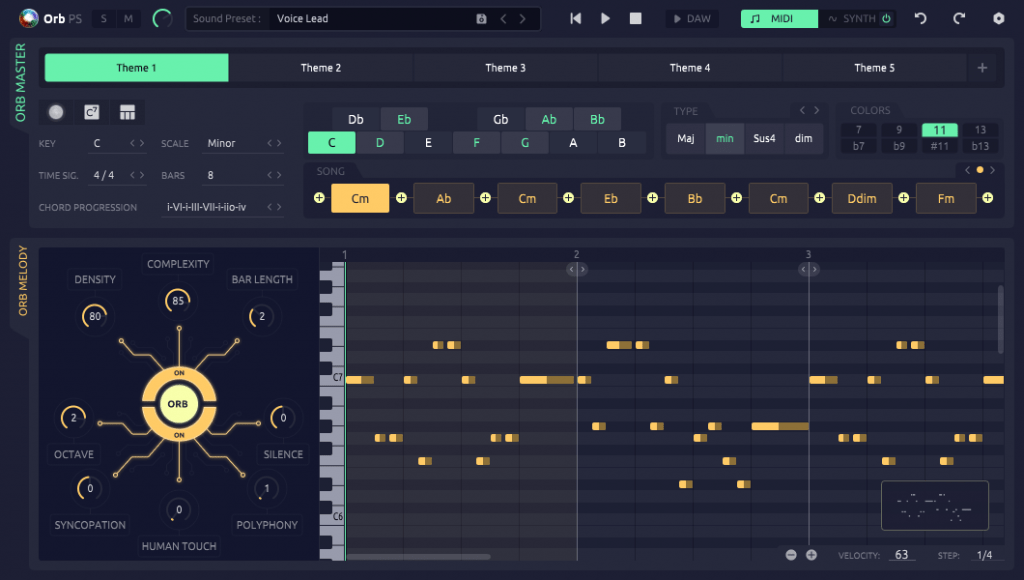

While the rise of generative music platforms that can conveniently create ready-to-go music has been predictably controversial, particularly among soundtrack composers, the concurrent appearance of machine-learning tools that work with the composer and producer has been more warmly embraced. Tools such as Orb Plugins Producer Suite 3 seek to aid composition by constructing fresh melodies, chord sequences, keys, arpeggios and synth sounds that can significantly enhance a project.

Rather than handing off all duties to the AI, the user is invited to adjust the parameters its digital brain works with. The AI continually remodels itself based on the types of arrangements you’re concocting, serving up new variations that, in Orb’s case, are intended to make the resulting composition more expressive and cohesive.

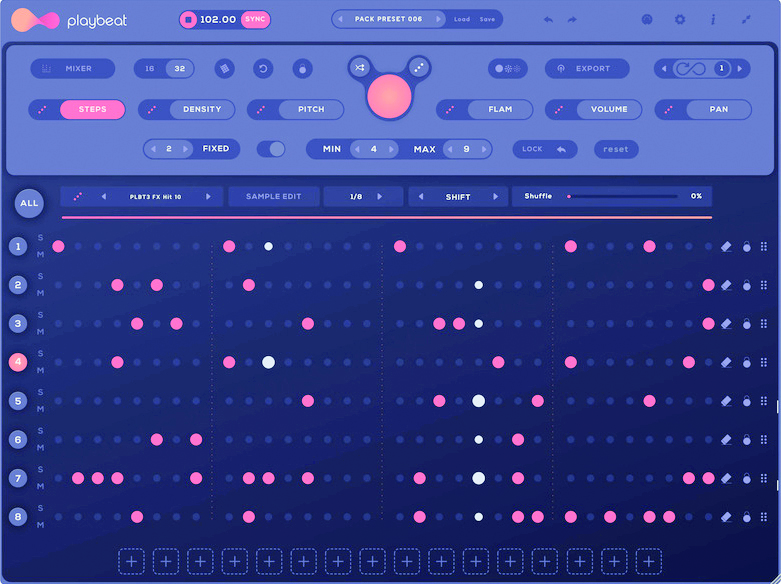

While music theory is one aspect of track-building that AI can undoubtedly smooth out, beat-making is also well within its purview. A case in point is Audiomodern’s Playbeat 3, a fantastic beat-making toolkit complete with a highly manipulable step sequencer. Playbeat’s smart algorithms work alongside you to either build on or totally remix your rhythmic designs at an incredibly fast rate. This type of virtual pro suggestion-maker represents AI at perhaps its most constructively human-like. That said, the thought of Playbeat’s (and similar software’s) ‘always watching’ learning algorithms monitoring your every move may be a little unsettling to old-school sci-fi aficionados.

What are the chances?

One of the principal benefits of AI in the compositional domain is its ability to conjure up new ideas beyond the staid ruts we all find ourselves falling into as the years go by. The imperative to shake up standard creative pathways, exemplified by chord-sequence-suggesting software like Amadeus Code and the intricate arrangement-shaping of Orb Composer, long predates the advent of AI.

Way back in the early 1950s, experimental composer John Cage created his extraordinary Music of Changes by consulting the I Ching, the ancient Chinese divination text, tossing coins to select from its 64 hexagrams and applying the results to musical elements like tempo, sound density, dynamics and a whole lot more.
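At heart, Cage’s method is a chance operation: a random draw selects one of 64 possibilities, which is then looked up in a chart of musical values. The sketch below is a loose, simplified illustration, not a reconstruction of Cage’s actual charts. It uses the traditional three-coin method to build a hexagram, but reads the six lines as a plain 6-bit number rather than using traditional I Ching hexagram numbering, and the 64-cell dynamics chart (`DYNAMICS_CHART`) is entirely hypothetical.

```python
import random


def toss_line(rng):
    """Three-coin method: heads count 3, tails count 2, so each line totals 6 to 9."""
    return sum(rng.choice((2, 3)) for _ in range(3))


def cast_hexagram(rng):
    """Build six lines bottom-up; odd totals (7, 9) give solid yang lines, even totals (6, 8) broken yin lines."""
    return [toss_line(rng) % 2 == 1 for _ in range(6)]


def chart_index(lines):
    """Read the six lines as a 6-bit number from 0 to 63.

    Simplification: this is NOT the traditional I Ching numbering of the hexagrams.
    """
    index = 0
    for position, is_yang in enumerate(lines):
        if is_yang:
            index |= 1 << position
    return index


# Hypothetical 64-cell chart: eight dynamic markings cycled across the cells.
DYNAMICS = ["ppp", "pp", "p", "mp", "mf", "f", "ff", "fff"]
DYNAMICS_CHART = [DYNAMICS[i % 8] for i in range(64)]

rng = random.Random(1951)  # fixed seed so runs are repeatable
for event in range(4):
    idx = chart_index(cast_hexagram(rng))
    print(f"event {event}: chart cell {idx:2d} -> {DYNAMICS_CHART[idx]}")
```

The composer’s taste lives entirely in how the chart is filled; the coins only decide which cell is read. That division of labour, human-built options plus an external decision-maker, is the thread that runs from Cage to today’s suggestion-making software.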

Skip forward to the 1970s, and ambient titan Brian Eno used the much more to-the-point ‘Oblique Strategies’ cards, each of which contained a written instruction that he or other musicians could interpret in interesting ways. The intent was to steer them out of their comfort zones.

Examples included “Honour thy error as a hidden intention” and “Use an old idea”. Though not computer-based, Cage’s and Eno’s forays into externalising decision-making were forerunners of many of the principles behind today’s AI compositional choice-makers, laying the foundations for the ways in which artificial intelligence would come to be trusted to guide contemporary music-makers.

Die, robot

While we at MusicRadar are determined to emphasise the good that AI and smart algorithms can bring to the table, there are still many who baulk at the idea of a future wherein a multitude of mechanical minds sculpt and pen our tracks. Music-generating platforms face considerable contempt from some quarters, while others direct their ire at the automation offered by smart algorithms.

Mastering platforms come under particular fire. Even as algorithm-driven platforms such as LANDR and eMastered continue to get smarter, the companies themselves offer an olive branch to traditional jobbing mastering engineers. As eMastered’s Colin McLoughlin told The Verge in an interview, “For the absolute best mastering, a traditional mastering engineer will always be the ultimate option.”

Ode to code

As these myriad AI algorithms continue to learn and permeate every facet of music-making ever more thoroughly, it’s likely that machine-assisted workflows will become increasingly normalised over the next few decades. While there are legitimate conversations to be had about the impact of generative music platforms on professional composers, AI-assisted tools can undoubtedly be a major benefit to all.

Over the coming days and weeks on MusicRadar, we’ll be delving much deeper into some of the finest AI-driven software, platforms and apps currently available, illustrating just how and why AI’s suffusion throughout the world of music technology has become a force for so much time-saving and idea-aiding good.

6 innovations in music AI

1. Illiac 1 (1956)

The unveiling of Lejaren Hiller’s Illiac Suite was a foundational moment in AI development. The computer that composed the piece, the ILLIAC 1, was the very first ‘supercomputer’ to be owned by an academic institution. It composed the suite by working through an array of algorithmic choices provided by Hiller. While baffling to many at the time, the work of this computer is now regarded as astonishingly innovative.

2. David Bowie's Verbasizer (1995)

An artist always ahead of the curve, David Bowie decided to modernise his ‘cut-up’ lyric-writing technique in the 1990s. By 1995, many of Bowie’s lyrics were generated in tandem with the Verbasizer, a simple lyric-shuffling program. While not ‘smart’ per se, Bowie’s openness to creatively collaborating with technology while writing offered a tantalising glimpse of the future.

3. EMI (1997)

David Cope’s Experiments in Musical Intelligence program was initially conceived to offer new creative pathways when inspiration was lacking, but what Cope had actually stumbled upon was the means to emulate the style of any artist whose work was fed into the software. By cleverly recognising patterns, the software was able to generate new compositions that mirrored that style. Computer-generated music was coming of age.

4. Continuator (2012)

First devised in 2001, the Continuator was a piece of machine-learning software. It gathered data on how musicians played and used that data as a template to complement them smartly, improvising along in real time. Its inventor, François Pachet, would go on to become a big player in Spotify’s development.

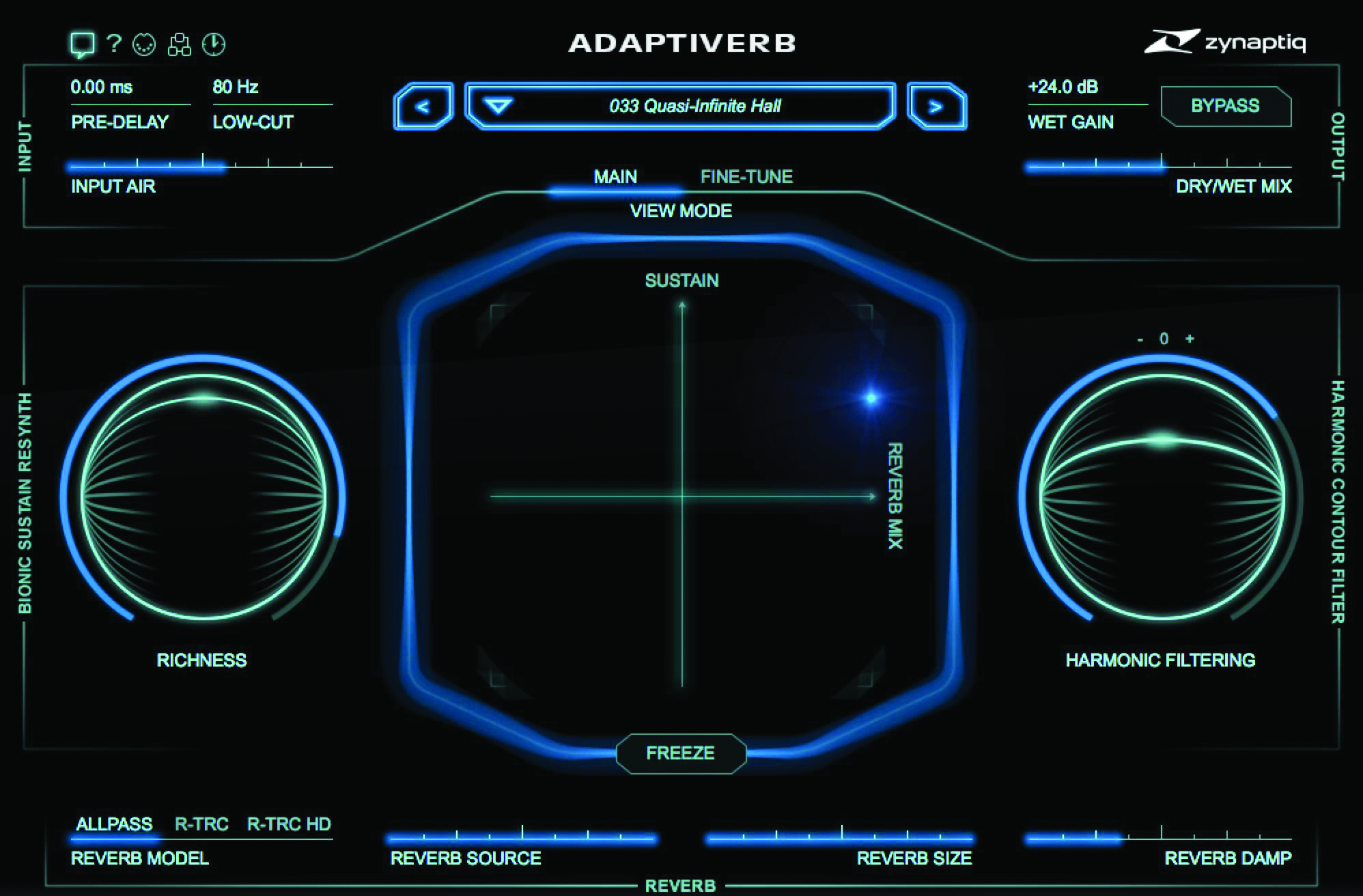

5. Zynaptiq Adaptiverb (2016)

Among the first major applications of AI in music-mixing plugins, Zynaptiq’s Adaptiverb inspects the source audio and, using its Bionic Sustain resynthesizer, crafts the perfect bespoke reverb tail from hundreds of networked oscillators. It set a new precedent, underlining the practical benefits of machine learning across production.

6. iZotope's Assistive Audio Technology (2016)

iZotope has embraced the time-saving potential of AI like few other companies, and its helpful Assistive Audio Technology is the secret sauce behind such flagship products as audio-enhancing RX and sound-perfecting Ozone. By intelligently analysing audio and applying quick fixes, iZotope’s tools have probably saved professionals years of precious studio time, collectively.

Computer Music magazine is the world’s best-selling publication dedicated solely to making great music with your Mac or PC. Each issue brings its lucky readers the best in cutting-edge tutorials, need-to-know expert software reviews and even all the tools you actually need to make great music today, courtesy of our legendary CM Plugin Suite.