A Google research team has created a playable AI synth

The NSynth Super uses machine learning and neural networks to generate sounds

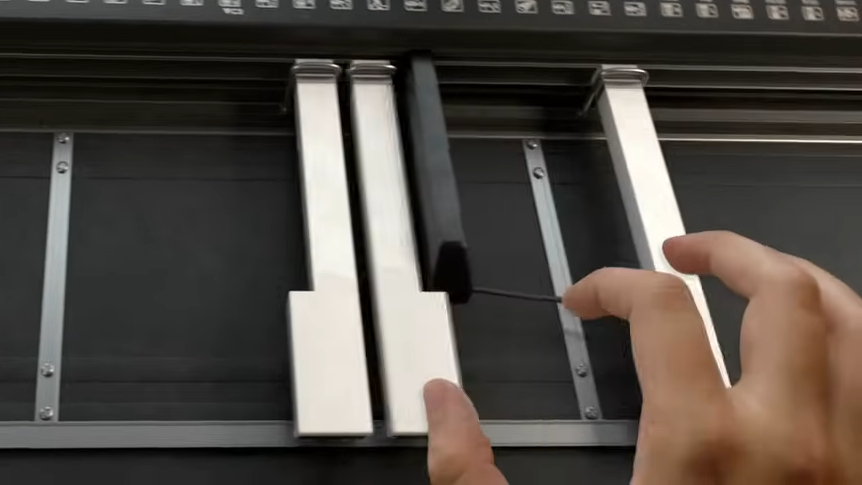

A research team at Google has developed NSynth Super, an experimental open source instrument that uses machine learning and neural networks to generate sounds.

The ongoing Magenta project has been set up to explore how machine learning tools can help people to create art and music in new ways; one of its earlier creations was the NSynth Neural Synthesizer. This uses a deep neural network to learn the characteristics of sounds, and then creates new sounds based on these characteristics.

As part of a bid to make this technology more accessible, the Magenta team has now developed NSynth Super in collaboration with Google Creative Lab. This open source hardware features a touchscreen interface and enables musicians to generate sounds from four different sources.

From 16 original source sounds across 15 pitches, it's said to be possible to generate more than 100,000 new sounds. Four source sounds are assigned to each of the four dials, and musicians use these dials to select the sounds they want to explore between. Dragging a finger across the touchscreen then lets them navigate new sounds that combine the acoustic qualities of the four selected sources.
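To give a rough idea of how a single touch position can mix four sounds, here's a minimal sketch. It is not taken from the NSynth Super code on GitHub. It assumes each selected source sound is represented by a list of numbers (a hypothetical stand-in for the characteristics NSynth learns), that the four sources sit at the corners of the touchscreen, and that the finger position weights them bilinearly. The function name `blend_corners` and the corner layout are our own assumptions. In the real instrument, the blended result would still need to be decoded back into audio by the neural network, a step this sketch leaves out.

```python
from typing import List, Sequence


def blend_corners(
    top_left: Sequence[float],
    top_right: Sequence[float],
    bottom_left: Sequence[float],
    bottom_right: Sequence[float],
    x: float,
    y: float,
) -> List[float]:
    """Bilinearly mix four equal-length vectors (hypothetical sound representations).

    x and y are the touch position normalised to [0, 1], with (0, 0) at the
    top-left corner and (1, 1) at the bottom-right corner.
    """
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        raise ValueError("x and y must lie in [0, 1]")
    if len({len(top_left), len(top_right), len(bottom_left), len(bottom_right)}) != 1:
        raise ValueError("all four vectors must have the same length")

    # Each corner's weight grows as the finger moves towards it; the four weights sum to 1.
    w_tl = (1 - x) * (1 - y)
    w_tr = x * (1 - y)
    w_bl = (1 - x) * y
    w_br = x * y

    # Mix the four sources element by element.
    return [
        w_tl * a + w_tr * b + w_bl * c + w_br * d
        for a, b, c, d in zip(top_left, top_right, bottom_left, bottom_right)
    ]


if __name__ == "__main__":
    # Hypothetical two-number "characteristics" for four source sounds.
    flute, bass, organ, snare = [1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [1.0, 1.0]
    # A touch in the centre of the screen gives each source an equal 25% share.
    print(blend_corners(flute, bass, organ, snare, 0.5, 0.5))  # prints [0.5, 0.5]
```

Touching a corner returns that corner's source unchanged, while anywhere in between yields a weighted mix. That matches, in spirit, the idea of exploring the space between the four sounds chosen on the dials.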

NSynth Super isn’t a commercial product, but you can download all the source code, schematics and design templates for the prototype on GitHub. Find out more on the NSynth Super website.


I’m the Deputy Editor of MusicRadar, having worked on the site since its launch in 2007. I previously spent eight years working on our sister magazine, Computer Music. I’ve been playing the piano, gigging in bands and failing to finish tracks at home for more than 30 years, 24 of which I’ve also spent writing about music and the ever-changing technology used to make it.
