10 ways to use generative AI in your music production

Harness the ever-increasing power of generative AI in your music-making

Artificial intelligence is a phrase that’s becoming increasingly inescapable. As the array of technologies that fall under the term’s umbrella continues to develop, each successive demonstration of their capabilities leaves us baffled, astonished and unsettled in equal measure.

Over the past year, the AI-driven tech that’s most caught the public’s imagination has been generative AI: systems capable of generating text, images, video or sound in response to instructions from the user.

Generative AI models use neural networks and machine learning techniques to learn patterns from vast quantities of data, so that they can then generate new data based on what they’ve learned. This means generative AI-powered tools can hold a conversation, produce photorealistic images, and even create music.

While the extraordinary image-making powers of DALL-E have captivated our social media feeds and ChatGPT has garnered headlines for its ability to do, well, pretty much anything, music-making AI tools haven’t yet had their moment in the mainstream spotlight.


That’s not to say they’re not equally powerful, though; tools like MusicLM, MusicGen and Riffusion have harnessed the power of generative AI to create music and sound in ways that have never been seen before. Simply open up one of these in your browser, type in what you want to hear - anything from “chill lo-fi beats” to “free jazz played on the kazoo at 200bpm” - and the technology will do its best to approximate your description in the audio it generates.

It’s all fairly bonkers, but these advanced pieces of software aren’t just mind-boggling demonstrations of AI’s sophistication. They’re also highly useful (and free) tools that musicians and producers can use to facilitate their creative process in a variety of ways. Here, we’re going to run through 10 ways that you can use generative AI in your music-making.

1. Free samples

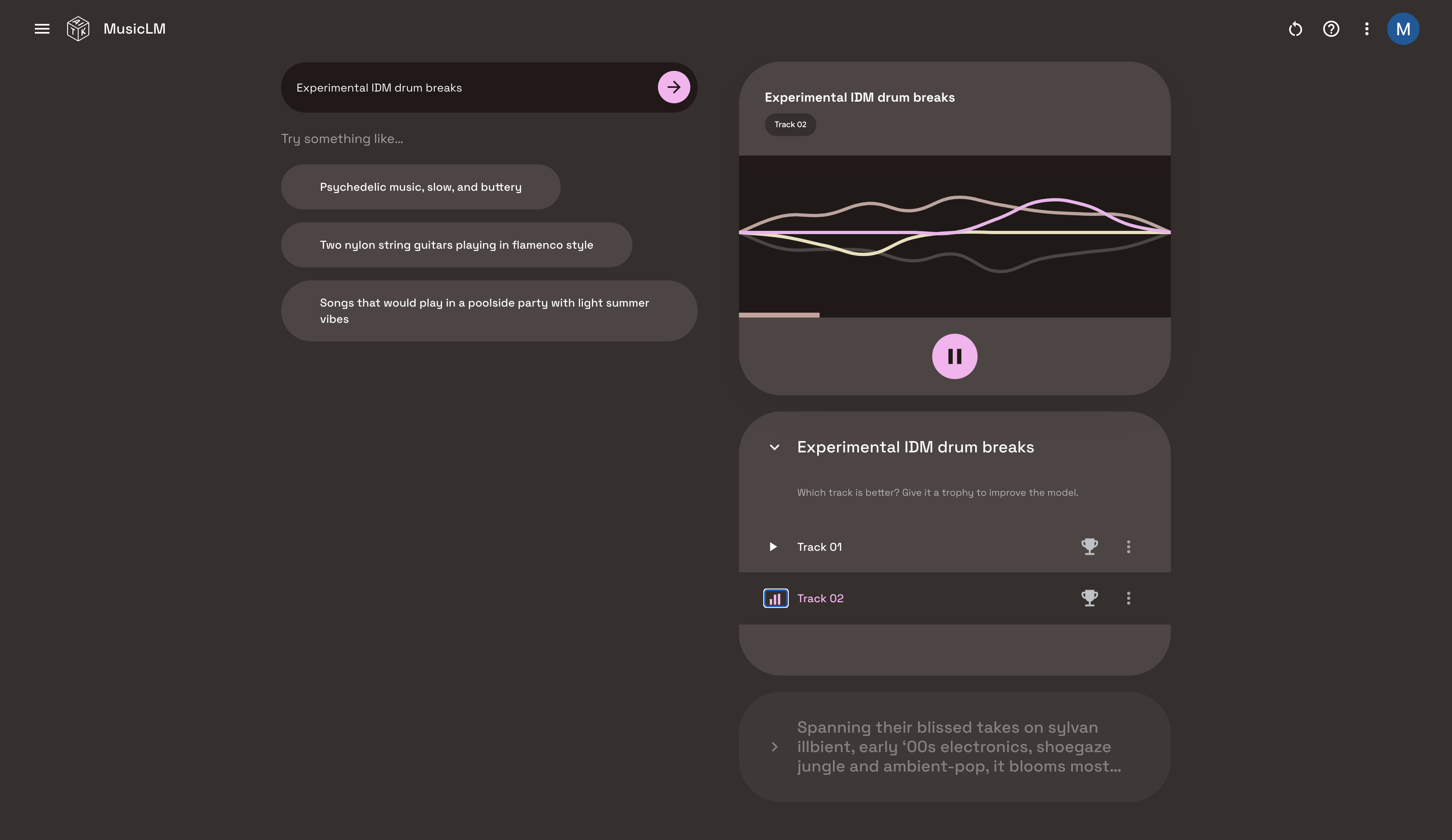

Designed by Google, MusicLM is an experimental, browser-based AI music generator that turns text descriptions into audio. It’s also a hugely powerful tool for electronic music-makers. If you sign up to Google’s AI Test Kitchen, you can join a waitlist to test out MusicLM for free. Once you’re in, just type in what you want to hear and the AI will respond with two 20-second audio clips. These are royalty-free and can be sampled and repurposed in your own productions.


2. Roll the dice

In our experience with MusicLM, the software isn’t great at following specific instructions; if you ask for a “dreamy synth melody at 130bpm in A Major”, that’s not exactly what you’ll get. We had more success in creating interesting sounds when we tried prompts that were more vague and creatively worded.

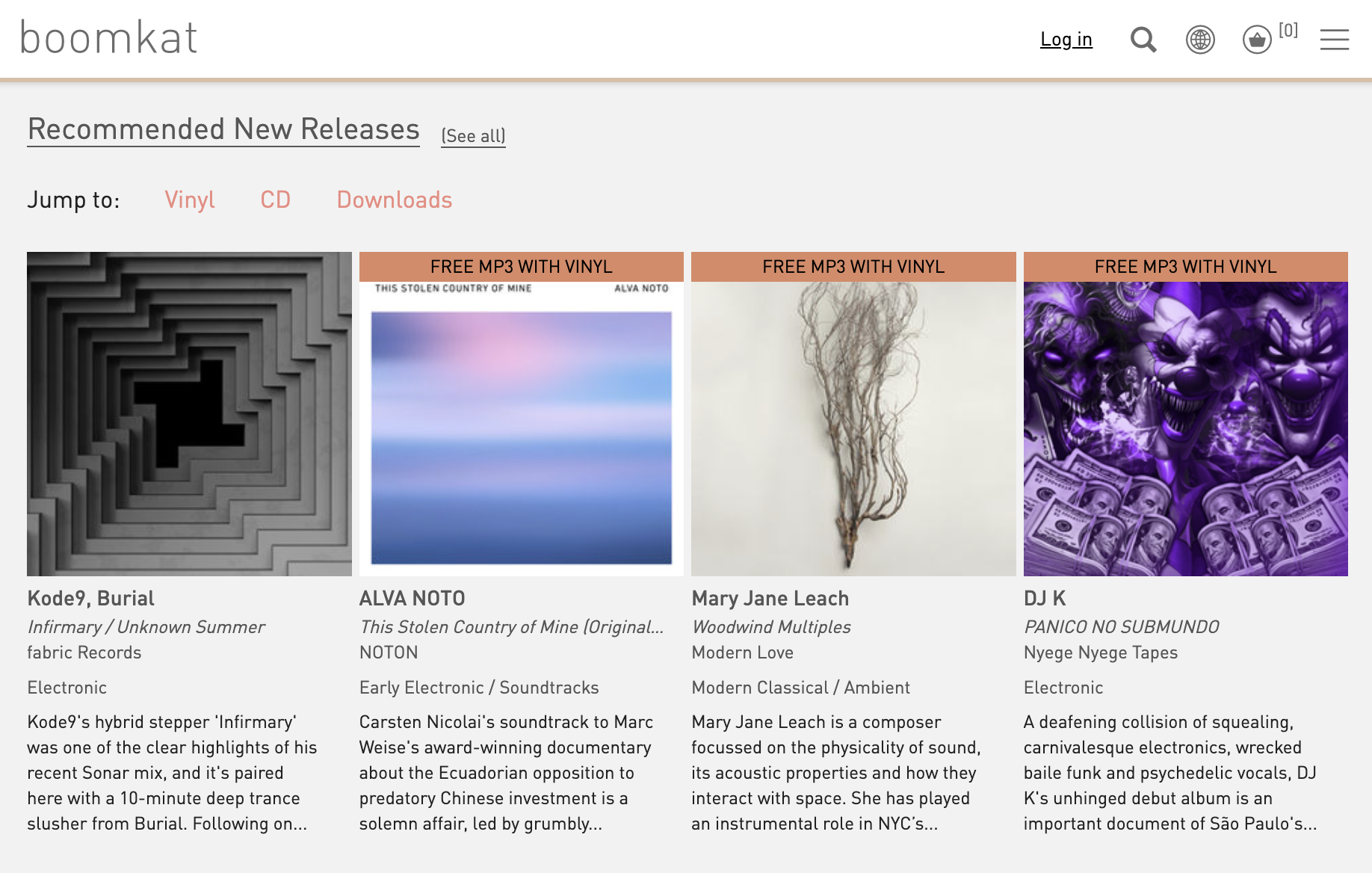

Using flowery descriptions of music lifted from an online record store, we prompted MusicLM to create strange and abstract sounds that worked well as samples to creatively manipulate. Used this way, MusicLM becomes a kind of semi-randomized sample generator.

3. Size up the competition

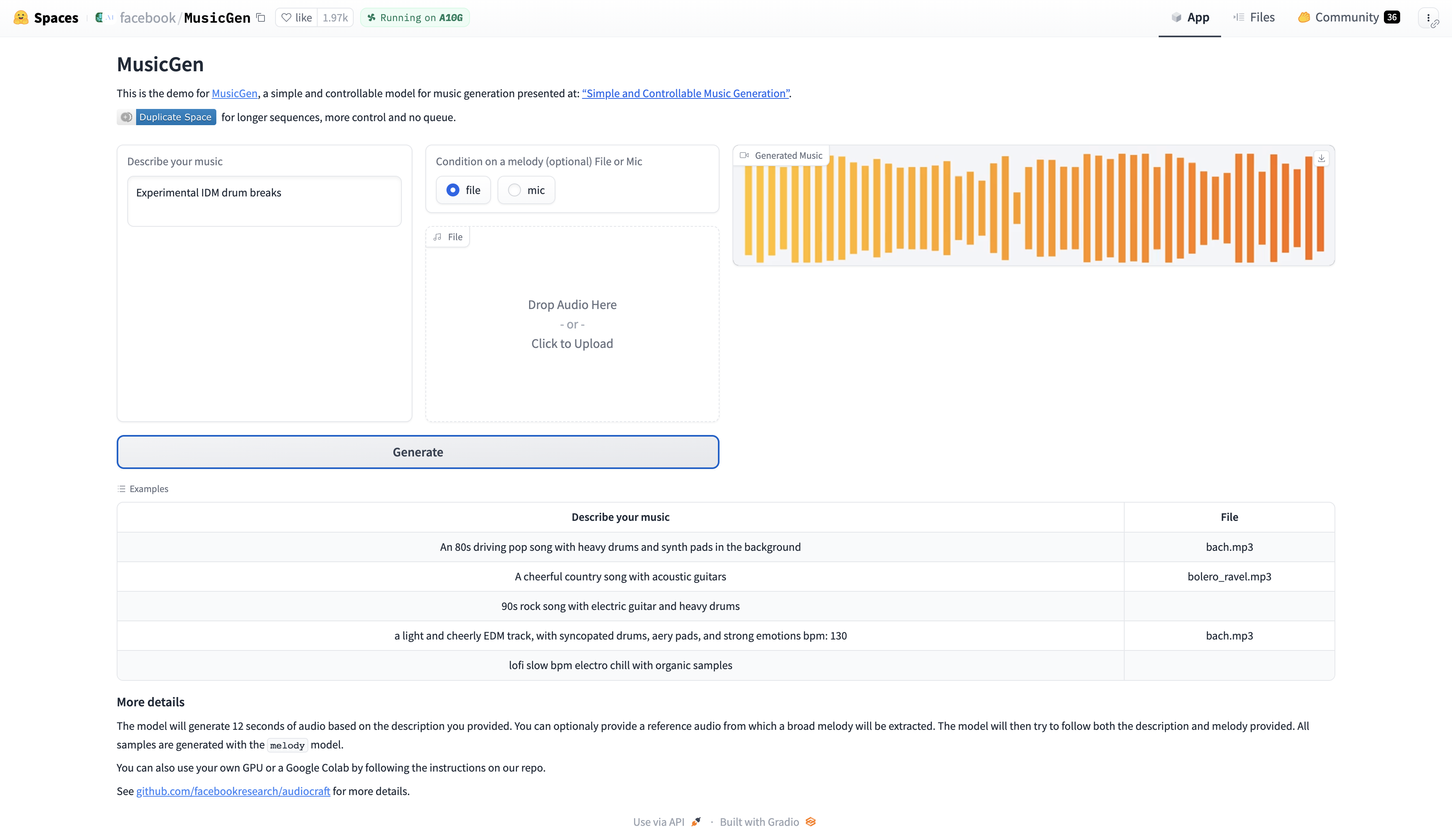

Not to be outdone by their Big Tech rival, Meta (formerly known as Facebook) have released their own AI music generator called MusicGen. It works in a pretty similar fashion to MusicLM, but it’s also capable of recreating melodies specified by the user; simply upload a reference audio file and MusicGen will extract a melody from it and incorporate that into the resulting clip.

We tested a variety of prompts across both and found that, in many cases, MusicGen actually produced better samples than MusicLM. We managed to cajole it into producing some pretty impressive IDM-esque drum patterns. Give both a try and see which you think is better.

4. Riffing with AI

Riffusion is another free, browser-based AI music generator, but there’s a twist: it uses the image-generating AI Stable Diffusion to create spectrograms - visual representations of how a sound’s frequency content changes over time - which are then converted into audio. Pretty cool, right? The results often sound lo-fi and garbled, but it’s worth testing out. This year, UK producer patten made an entire album using only Riffusion samples; if he can do it, so can you!
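To give a rough idea of what turning a spectrogram back into sound involves, here’s a minimal Python sketch. It is not Riffusion’s actual code: the function name `spectrogram_to_audio` is hypothetical, it assumes a plain list-of-frames spectrogram with evenly spaced (linear) frequency bins, and it uses simple additive resynthesis, where each frequency bin becomes a sine wave whose level follows the picture. Because a spectrogram image stores loudness but not phase, the sketch starts each sine at a random phase; real tools use more sophisticated phase-reconstruction methods, such as the Griffin-Lim algorithm.

```python
import math
import random


def spectrogram_to_audio(spec, sample_rate=22050, hop=256, seed=0):
    """Very rough additive resynthesis of a magnitude spectrogram.

    Hypothetical helper for illustration only, not Riffusion's method.
    spec is a list of time frames; each frame is a list of non-negative
    magnitudes, one per frequency bin. Bin k is assumed to sit at
    k * (sample_rate / 2) / n_bins Hz (linear spacing). Each frame
    lasts `hop` samples.
    """
    n_bins = len(spec[0])
    nyquist = sample_rate / 2
    freqs = [k * nyquist / n_bins for k in range(n_bins)]
    # A spectrogram keeps no phase information, so pick random phases
    # (seeded so the result is repeatable).
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_bins)]

    samples = []
    for i, frame in enumerate(spec):
        # Fade linearly towards the next frame to avoid clicks at frame edges.
        nxt = spec[i + 1] if i + 1 < len(spec) else frame
        for n in range(hop):
            frac = n / hop
            t = (i * hop + n) / sample_rate  # global time keeps sines continuous
            value = 0.0
            for k in range(n_bins):
                mag = (1 - frac) * frame[k] + frac * nxt[k]
                if mag:
                    value += mag * math.sin(2 * math.pi * freqs[k] * t + phases[k])
            samples.append(value)

    # Normalise so the loudest sample has magnitude 1.0 (no clipping).
    peak = max((abs(s) for s in samples), default=0.0)
    if peak > 0:
        samples = [s / peak for s in samples]
    return samples
```

For example, a spectrogram whose frames are lit up only in bin 2 of 16 comes out as a steady sine tone at roughly 1.4kHz (2 × 11,025 / 16 Hz at the default sample rate).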

5. Make it your own

When using AI-generated samples, just like when you’re using samples from platforms like Splice or Loopmasters, it’s advisable to figure out a way to creatively transform them, thus incorporating them into your music in a way that’s unique to you. This way, your tracks will end up having an original vibe and not just sound like a bunch of pre-made samples pieced together.

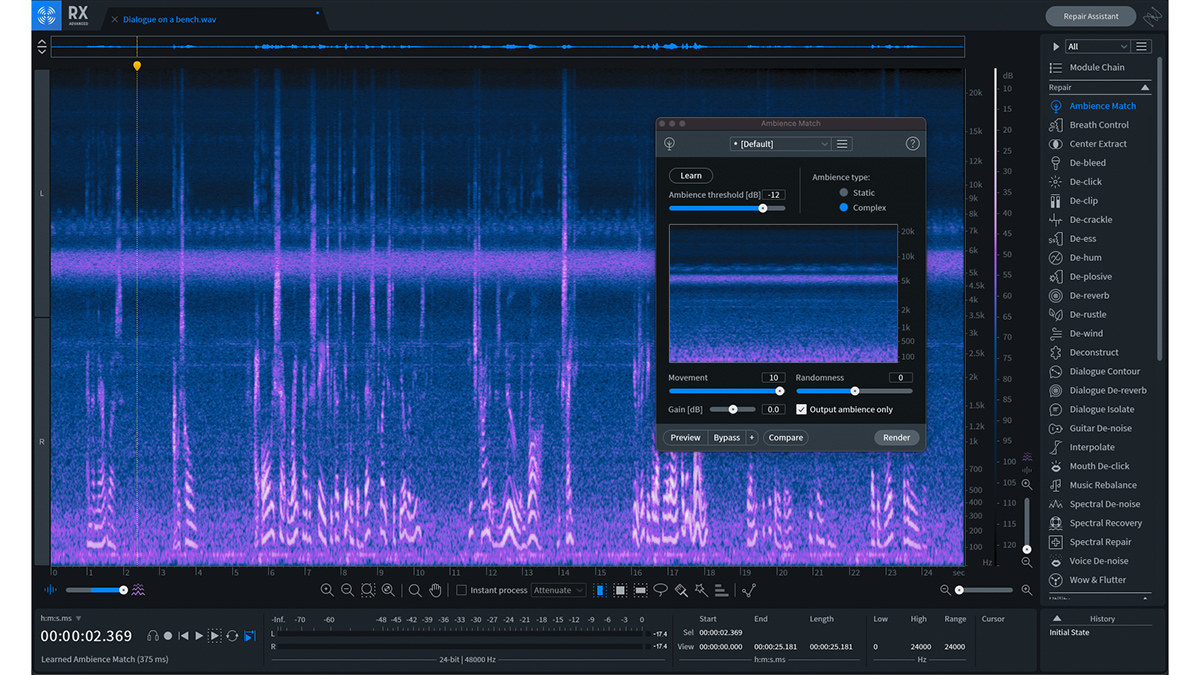

There are a thousand ways to do this: try chopping them up and rearranging the slices of each sample, or processing them with effects. You could even manipulate your AI-generated samples using AI-powered plugins like iZotope's RX 10.
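If you want to automate the chopping, here’s a minimal sketch using only Python’s built-in `wave` module. The function names are hypothetical, and it assumes an uncompressed PCM WAV file; it cuts the audio into equal-length slices and plays them back in an order you choose (repeats and omissions allowed), or in a seeded random order if you don’t pass one.

```python
import random
import wave


def chop_and_rearrange(frames, frame_size, n_slices, order=None, seed=None):
    """Split raw PCM audio into equal slices and reassemble them in a new order.

    Hypothetical helper for illustration.
    frames: raw audio bytes (e.g. from wave.Wave_read.readframes)
    frame_size: bytes per frame = sample width * number of channels
    order: list of slice indices to play back; may repeat or skip slices.
    If order is None, the slices are shuffled (seeded for repeatability).
    Any leftover frames that don't fill a whole slice are dropped.
    """
    n_frames = len(frames) // frame_size
    slice_len = (n_frames // n_slices) * frame_size  # whole frames only
    slices = [frames[i * slice_len:(i + 1) * slice_len] for i in range(n_slices)]
    if order is None:
        order = list(range(n_slices))
        random.Random(seed).shuffle(order)
    return b"".join(slices[i] for i in order)


def chop_wav_file(src_path, dst_path, n_slices=8, order=None, seed=None):
    """Read a PCM WAV file, rearrange its slices and write the result."""
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames = src.readframes(src.getnframes())
    frame_size = params.sampwidth * params.nchannels
    new_frames = chop_and_rearrange(frames, frame_size, n_slices, order, seed)
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)  # same channels, bit depth and sample rate
        dst.writeframes(new_frames)  # frame count in the header is updated
```

For instance, `chop_wav_file("loop.wav", "loop_chopped.wav", n_slices=8, order=[0, 0, 3, 2, 7, 7, 5, 4])` would turn an eight-slice sample into a new pattern; the file names here are placeholders.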

6. Emerging technologies

The tools we’ve covered so far have all been browser-based, but soon enough, generative AI will be living inside your DAW. In fact, it already could be.

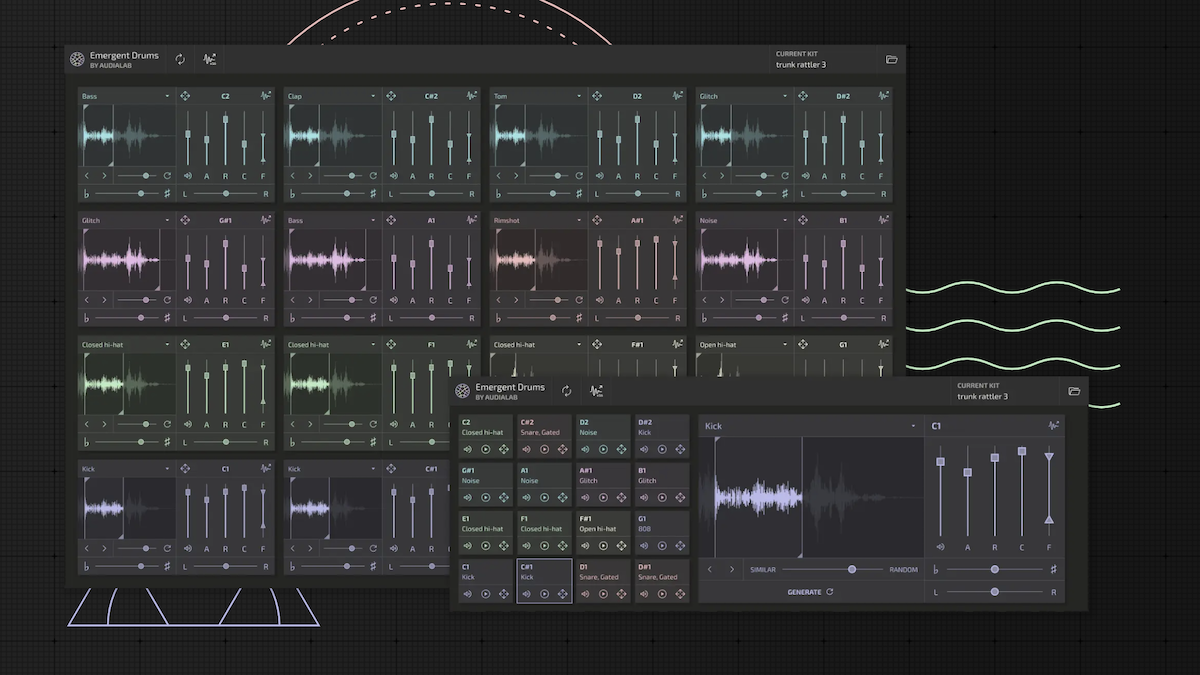

Audialab’s Emergent Drums is billed as the world’s first AI-based drum machine; the plugin doesn’t use any pre-existing samples or synth engines, but instead generates drum sounds using machine learning techniques. It can produce an endless variety of conventional sounds like kicks and snares, and it can even generate variations of an existing sample that the user drops in.

7. Find your voice

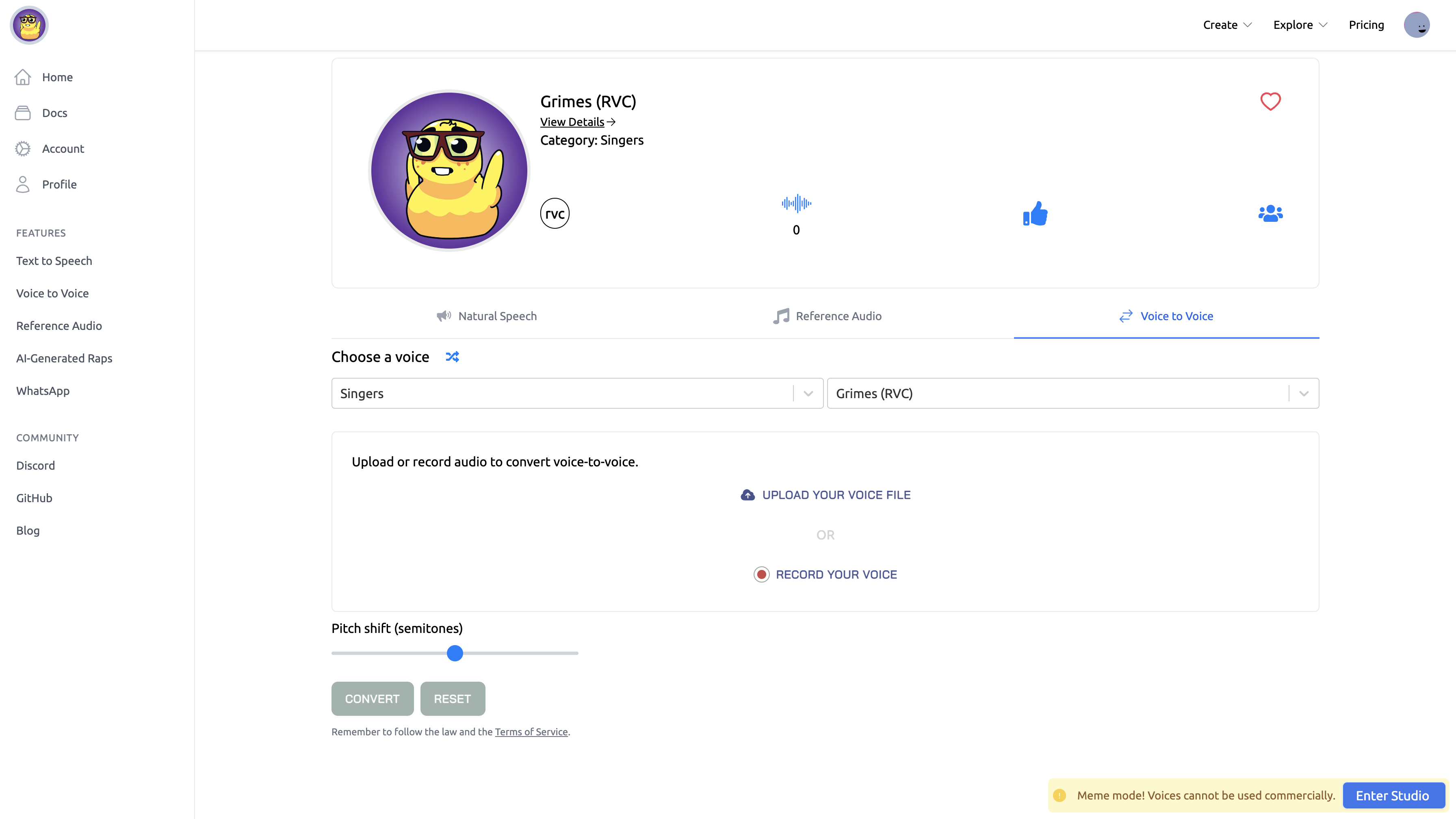

Don’t like the sound of your own voice? Thanks to AI, you can now have someone else’s. AI-powered software is capable of transforming the timbre of any vocal recording to resemble a different voice, including those of famous vocalists. Just search 'AI cover song' on YouTube and you'll see what it's capable of.

Open-source AI models like RVC and So-Vits-SVC can be run on your own computer or accessed through Google Colab notebooks that are easy to find online; check out the AI World and AI Hub Discord servers for more information. If that’s beyond you, try using websites like Uberduck and Voicify instead. If you’re cloning the voice of an existing artist, we don’t recommend monetizing the music you make, as you may be violating copyright law.

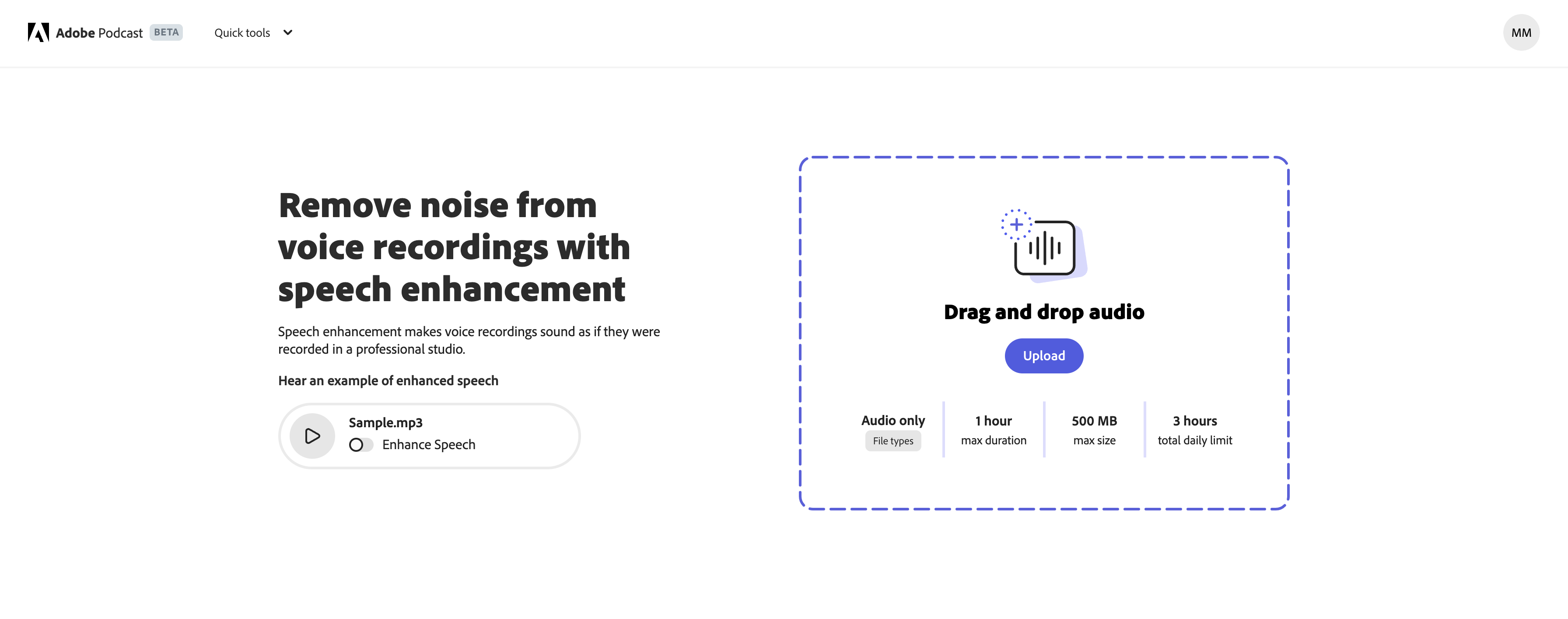

8. Computer, enhance!

Adobe’s website hosts a free AI-powered tool called Enhance Speech. Aimed at podcasters, it uses machine learning to make poor-quality voice recordings sound as if they were recorded in a professional studio. But if you run musical samples through this software, it’ll attempt to transform them into a human voice; this makes strange digital voices out of regular sounds. The results sound fascinatingly weird.

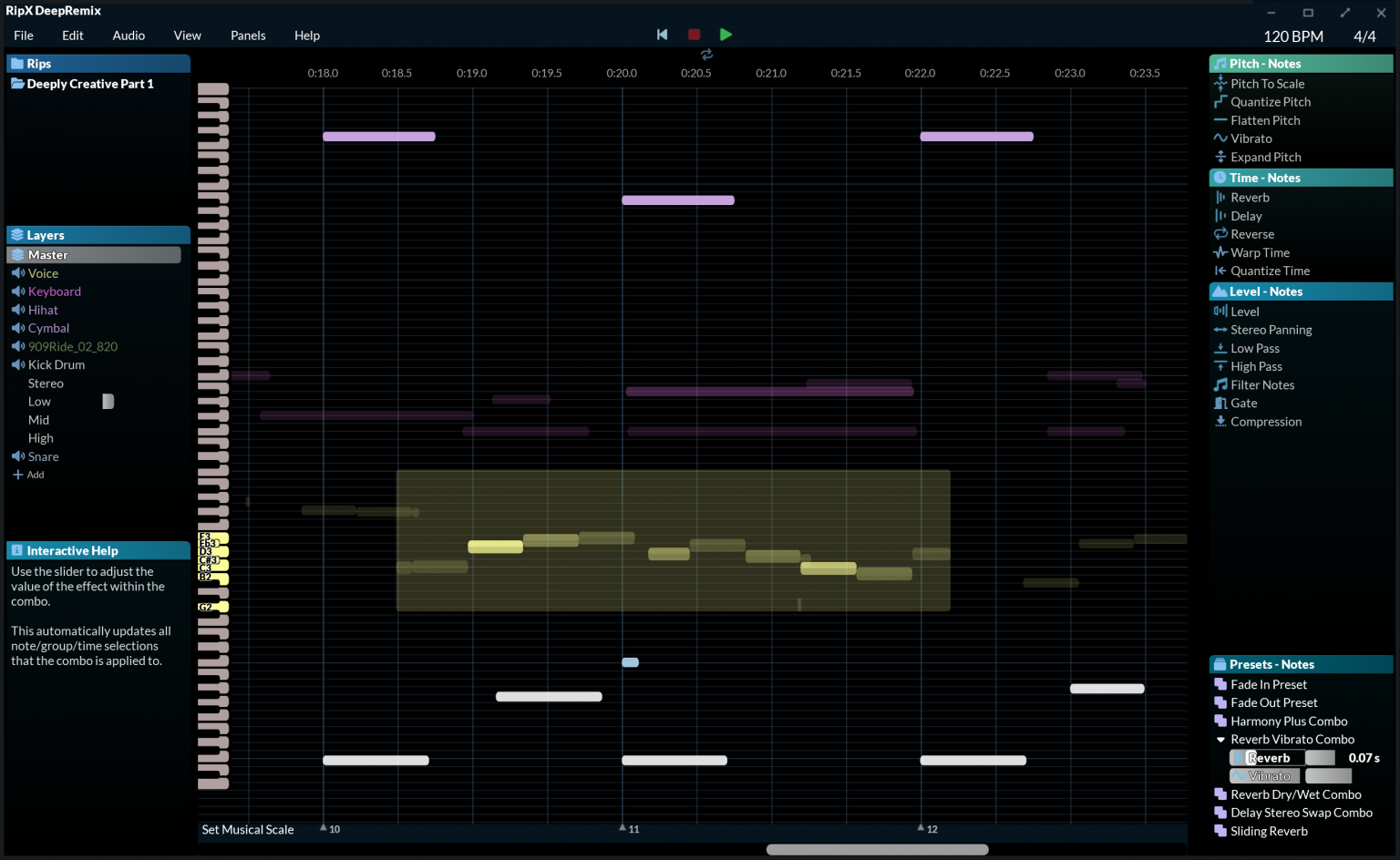

9. Stem the tide

AI has been utilized in stem separation software to create tools that can lift a specific instrument or vocal out of a mixed track. This is hugely useful if you’re trying to sample or remix tracks without access to the original stems. Free online tools like Fadr can be used for this, but there’s also paid-for software available that can do it better.

HitnMix’s RipX separates mixes into stems and lets you treat them like MIDI, pitching individual notes within the recording and even replacing their timbre with other sounds. Like Adobe’s Enhance Speech, these tools can be creatively misused: try using iZotope’s RX 10 to extract an instrument stem that isn’t actually present in a recording, and it’ll spit out something entirely new.

10. Backchat

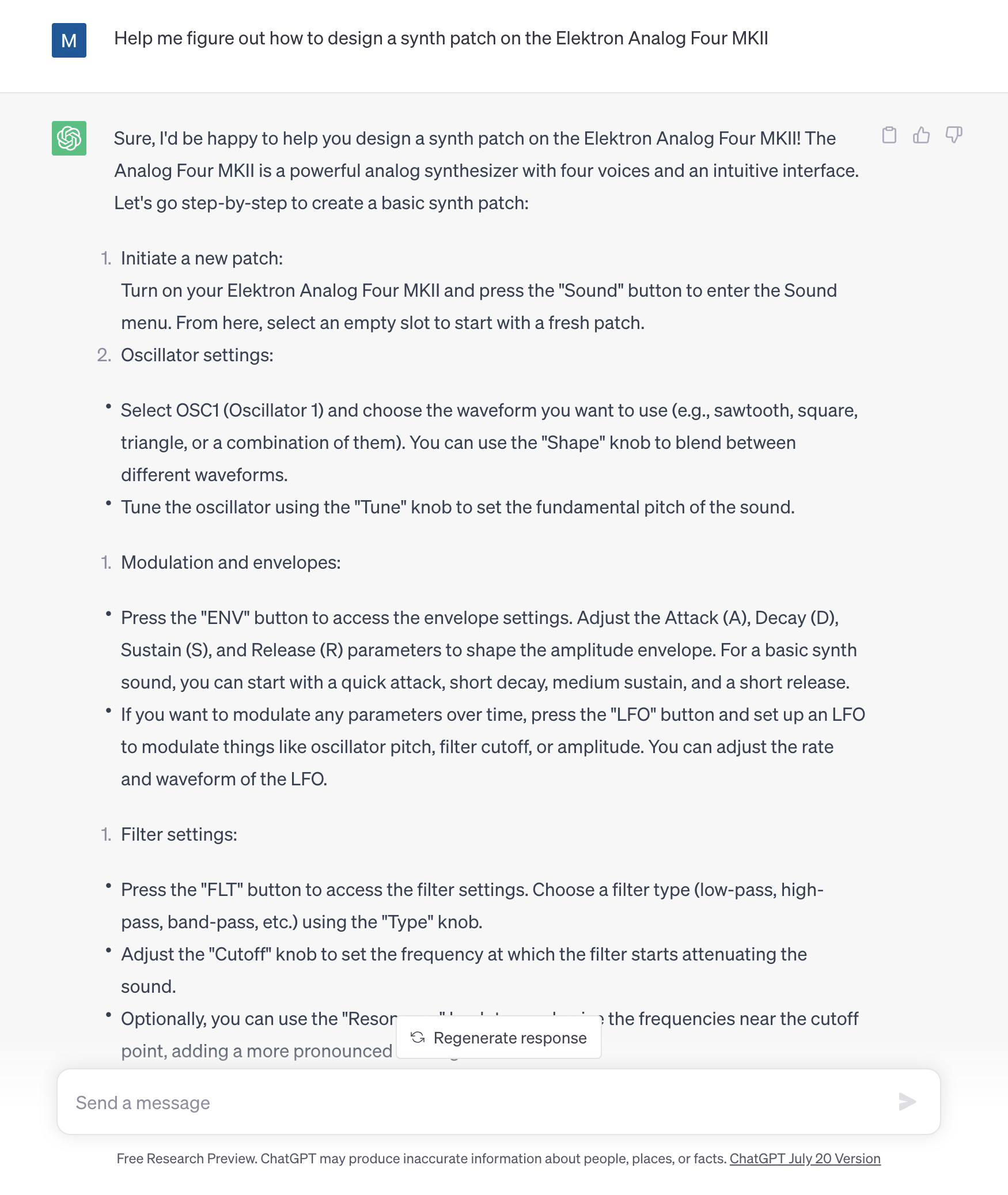

You’ve probably heard of ChatGPT, the AI-powered chatbot that’s been trained on vast swathes of text from the internet. There’s not much it can’t do, and that makes it a useful assistant for music-makers.

You can ask it to write melodies and chord sequences or come up with lyrics, but the results may be a little derivative and won’t win any awards for originality. Rather worryingly for us, though, ChatGPT can even offer technical advice on music production and help you figure out how to use your gear. Do we look concerned?

MusicRadar is the number one website for music-makers of all kinds, be they guitarists, drummers, keyboard players, DJs or producers...
