Imagine a future with us. Let’s say you’re opening up your DAW, and you’ve got a clear idea of the track you’d like to make.

Firstly, you want to recreate the drum sounds you’ve heard in a classic Aphex Twin tune, but you can’t find the right samples anywhere. You’re hoping to pair those with a type of pad sound that you can’t quite manage to design, but you can imagine vividly in your head. Finally, you have a set of bassline loops that you’re keen on, but you want to explore some new variations on their timbre and style.

Now imagine that you open up a plugin and type in a prompt, like “16 drum samples that sound like the drums in Aphex Twin’s Windowlicker”, and it instantly generates a set of samples that share a resemblance with the song that inspired you. Next, you simply type into the plugin window a description of the pad that you’ve imagined: “pad sound, atmospheric and ethereal, with shimmering high frequencies and a long reverb tail, slightly detuned”.

Just like that, it’s generated a multisample that matches your specifications and is ready to be loaded into a sampler. Finally, you drag and drop the bass samples you liked into the plugin, and it spits out a selection of alternative loops that share the sonic DNA of the original sound. In just a few minutes, with a few brief taps on the keyboard, the sounds in your head have become a reality.

If you haven’t been paying attention to the rapid advancements in AI technology that have taken place over the past few years, this might sound like some kind of sci-fi music production fever dream. The reality is, though, that this type of functionality is just around the corner. In a few years’ time, the process we’ve described above is likely to be readily available to anyone with a DAW. Gone will be the days of combing through Splice, or rummaging around in your external hard drive to find the sound you’re looking for: if you can describe it in words, it can be generated by AI.

Audialab is an American company working at the forefront of AI-assisted music production, and their team is currently hard at work developing software that they claim will be able to do everything we just described.

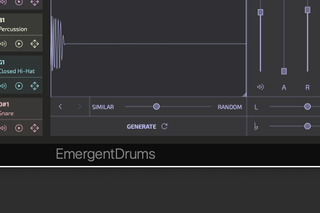

Their first plugin, Emergent Drums, is a VST3/AU drum machine that doesn’t use any pre-existing samples or synthesis engines. Instead, it generates drum samples using technology based on artificial intelligence and machine learning. Audialab bills it as the first plugin of its kind to bring AI-powered sound generation into the DAW, and the world’s first AI-based drum machine.

Emergent Drums makes use of a neural network that’s been trained on tens of thousands of existing samples. Studying the patterns deep within the raw waveform data of these audio files, the plugin gradually begins to form its own representation of what a kick drum, or a hi-hat, could sound like.

The plugin can produce countless variations on all the usual suspects - kicks, snares, claps and hi-hats - along with toms and glitchy, noise-based percussive sounds. The dataset behind Emergent Drums is always growing, and it can even use the sounds it generates to train itself further, using a flywheel method that mirrors the way that humans learn: we act, observe the results, combine that with everything we already knew, and update our understanding accordingly. It's worth noting the plugin doesn't use your computer's hardware to generate sounds - instead, it reaches out to Audialab's servers to retrieve them, and it can't be used offline.

We’ve spent some time with Emergent Drums, and while we’re thoroughly impressed with the vision behind it, we’d say that at present, it isn't a wholesale replacement for your sample library, or your 808. It would, however, make a great companion to either. When we first tested the software, we noted that the majority of the samples we were hearing it produce (especially the kicks) tended to lean towards the crunchy, digitally lo-fi sound that’s typical of AI-powered sound generators. Depending on your preferred style, that's not necessarily a bad thing - they would fit nicely inside a glitch/IDM track, for example.

Since then, Audialab have updated the plugin to include a new model, named Creamy (as opposed to Crunchy, the fittingly named original), which is geared towards producing smoother, cleaner samples. The improvement’s noticeable, and while many of the sounds we created wouldn’t pass for acoustic drums recorded with a microphone, they hold up well against synthesised drums and certainly make for interesting samples that could be useful in a number of applications. The plugin excels at producing punchy claps and rimshots, along with gritty percs and hi-hats. It also produces some unusual glitchy, noise-based sounds that we found intriguing, and that could work well in a more experimental context.

Of course, Emergent Drums isn’t the first plugin to make use of artificial intelligence. We’ve seen this kind of technology integrated into various music-making tools for several years now: plugins like iZotope’s Neutron 4 use AI to assist with mixing tasks, and Focusrite’s FAST collection integrates intelligent tech into its EQ, compressor and limiter, even using AI to dial in the ideal reverb settings for any sound. The difference is, though, that while the previous generation of AI-based music production tools has helped us to fine-tune existing sounds, the next generation is enabling us to invent entirely new ones.

Audialab’s co-founder Berkeley Malagon is a software engineer who, in his own words, “fell backwards into entrepreneurship”. After starting out in game design, he taught himself the fundamentals of AI, data science and machine learning, then went on to work on early chatbot technology and generative visual art. All the while, Malagon was dabbling in music production on the side, just as a hobby. His lightbulb moment came when he wondered whether the neural networks he’d devised for producing visuals could be applied to the realm of sound design. “I thought: if I can try that with audio, then would I never have to buy a sample pack again?”, he recalls.

“That was the first question. I figured, well, if this thing can generate square images by training on other square images, what if the square image was of a visual representation of a sound - a spectrogram of a sound. And then I do an inversion back to sound, could that work? That very naive experiment worked well enough to spark Audialab.” The plugin has since evolved significantly, but Malagon tells us that drums are just the beginning, and Audialab are hoping to “usher in a new era of sound design and production”. “We're basically working towards building DALL-E for sound design,” he says.

“Some of the work that we're doing right now is to expand the repertoire of the plugin beyond drums. So we're currently working on a model that will generate instrument sounds, vocal chops and things like that,” Malagon continues. “We have a tool coming soon, where you'll be able to drag in any sound from your library. So any of your drum samples, you can just drag it in, our AI will analyse it and then give you variations of that specific sound. So you don't have to just take whatever comes from our models, you'd be able to just take the sound that you know you love, and just get 100 variations.”

“You can imagine using this exact technology for foley sound, where you want every footstep in a movie to sound a little bit different. When you have the core sound of a crunchy footstep on grass, but you want each one to have its own character. Our technology can power that. Give us the one sound you have and then we'll give you the hundred variations that make it feel real. When we complete our vision, Audialab will be able to give you every sound you ever need.” It’s a bold statement, but not an unrealistic one, considering the astonishing developments we’ve recently seen arise at the intersection of music and AI.

Audialab’s work is happening alongside a rising wave of AI technology that’s dominated the headlines over the past year. ChatGPT, OpenAI’s intelligent chatbot, is a text-based interface that has, among other things, been used to write lyrics, construct chord sequences and build plugins. The AI image generators DALL-E and Stable Diffusion have stunned audiences with the ability to create high-quality, realistic images based on any given text prompt. Much progress has been made in the sphere of music and sound, too, though musical AI hasn’t yet captured the public’s imagination to the same degree.

The same kind of technological processes that Audialab are using to produce individual drum sounds are being used to generate complete songs that emerge fully formed out of the digital ether. In 2020, OpenAI, the company behind ChatGPT, quietly unveiled a platform called Jukebox that generates entire pieces of music in the style of a given artist or genre. Though the results were fascinating from a technical standpoint, they were also crunchy, distractingly lo-fi and musically garbled - there’s no chance they could pass for authentic songs recorded by the specified artists.

A recent development saw the technology behind AI image generator Stable Diffusion repurposed towards the realm of audio. Using a similar method to Malagon’s early experiments, the creators of Riffusion trained a neural network on spectrograms (visual representations of audio files) so that the model was able to produce new spectrograms in response to text input, which are then converted back into audio. Though it can’t create entire songs, Riffusion is capable of stringing together short loops to produce longer jams that are based on subtle variations of the initial seed.
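Both Malagon’s early experiment and Riffusion hinge on one step: turning a spectrogram back into sound. A spectrogram usually keeps only the magnitude of each frequency at each moment and discards the phase, so the phase has to be estimated. A classic way to do that is the Griffin-Lim algorithm, which alternates between an inverse and a forward short-time Fourier transform until the phase settles. The NumPy sketch below illustrates that general technique only; it isn’t Audialab’s or Riffusion’s code, and the frame size, hop length and function names are our own illustrative choices.

```python
import numpy as np

N_FFT = 512  # frame size in samples (illustrative choice)
HOP = 128    # hop between frames: 75% overlap (illustrative choice)

def stft(x: np.ndarray) -> np.ndarray:
    """Complex STFT with a Hann window; frames are centred by reflect-padding."""
    padded = np.pad(x, N_FFT // 2, mode="reflect")
    window = np.hanning(N_FFT)
    n_frames = 1 + (len(padded) - N_FFT) // HOP
    frames = np.stack([padded[i * HOP : i * HOP + N_FFT] * window
                       for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)  # shape: (frames, N_FFT // 2 + 1)

def istft(spec: np.ndarray, length: int) -> np.ndarray:
    """Least-squares inverse STFT via weighted overlap-add."""
    window = np.hanning(N_FFT)
    frames = np.fft.irfft(spec, n=N_FFT, axis=1)
    out = np.zeros(length + 2 * (N_FFT // 2))
    norm = np.zeros_like(out)
    for i, frame in enumerate(frames):
        start = i * HOP
        out[start : start + N_FFT] += frame * window
        norm[start : start + N_FFT] += window ** 2
    out /= np.maximum(norm, 1e-8)  # avoid dividing by ~0 at the padded edges
    return out[N_FFT // 2 : N_FFT // 2 + length]  # drop the centring pad

def griffin_lim(magnitude: np.ndarray, length: int, n_iter: int = 50,
                seed: int = 0) -> np.ndarray:
    """Estimate audio whose STFT magnitude matches `magnitude`."""
    rng = np.random.default_rng(seed)
    # Start from random phase, since the spectrogram stored none
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        audio = istft(magnitude * phase, length)
        # Keep the target magnitude, adopt the phase of the rebuilt audio
        phase = np.exp(1j * np.angle(stft(audio)))
    return istft(magnitude * phase, length)
```

Calling `griffin_lim(np.abs(stft(x)), len(x))` on a short test tone rebuilds audio whose spectrogram closely matches the original. Griffin-Lim recovers a plausible phase rather than the original one, so the result can sound right without matching the source sample for sample.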

Just a few weeks ago, Google made the boldest leap in musical AI yet. The technology they unveiled, MusicLM, is an AI-based platform that also generates music as raw audio from text descriptions. Though, unlike DALL-E and ChatGPT, they haven’t made the model available to the public, they have shared a number of examples which they believe show MusicLM “outperforming previous systems both in audio quality and adherence to the text description”. It’s a fair assessment, and the examples are notably superior to anything we’ve previously heard generated by AI-powered tools.

Perhaps the most fascinating thing about MusicLM is its highly advanced understanding of instructions. A particularly impressive example was generated using the following prompt: “A fusion of reggaeton and electronic dance music, with a spacey, otherworldly sound. Induces the experience of being lost in space, and the music would be designed to evoke a sense of wonder and awe, while being danceable.”

The clip that resulted was an uptempo hybrid of reggaeton and EDM, complete with fizzy synth arpeggios and even a robotic vocal line. In addition to genre and instrumentation, MusicLM can even be asked to emulate a performer’s experience level, evoke the vibe of a specific place through music, or generate melodies based on a tune hummed or whistled by the user.

Though platforms like these are undoubtedly fascinating, unlike Emergent Drums, they’re not a whole lot of use to music-makers just yet. Thus far, most advancements in the realm of music and AI have been directed towards generating music that’s already fully formed, rather than helping producers make their own.

That’s something that Malagon feels strongly about, and he tells us that Audialab is dedicated to protecting and empowering artists. “We're not in the background, working on research to press a button and generate a fully finished song,” he continues. “We're not interested in that. We're specifically interested in empowering sound designers, and the sound designers that we've talked to are excited about this.”

“What they say is, ‘oh, this is great, because I get to spend more time shaping a more developed idea than starting from scratch on different forms of synthesis.’ They spend a lot of time getting to the realm of a drum sound before they start shaping it. They love that they can get straight to the curation, the fine-tuning and shaping of a sound that's already in the realm of what they're looking for anyway.”

We’re not entirely convinced that this is a workflow every sound designer would willingly adopt, and we recognise that some may be justifiably concerned about how technology like this could affect their livelihoods in the future. There’s always going to be a place for those who can create sounds from scratch, and it’s clear that the value of tools like Emergent Drums lies in their ability to augment this process - or provide the uninitiated with an alternative - rather than make it redundant.

Reflecting on the phenomenal advancements we’re seeing in AI technology, it’s not hard to get carried away in imagining what kind of music-making tools could be dreamed up in the years to come. How about a synth that’s hooked up to a neural network, capable of generating customised patches based on text descriptions? Or a sequencer that's been trained on your Spotify library and can generate melodies and chord sequences in the style of any given artist?

How about an effects plugin that'll produce an exact replication of the reverb sound from a favourite track that you’ve been struggling to recreate? Or even an AI-powered DAW that watches you produce, learns your typical workflow, then makes helpful suggestions about where to take your next track? In the words of Audialab’s co-founder, “what new things could be possible, when you literally can pluck from infinity something that no one else has ever heard...?”

Creating an AI-generated beat with Emergent Drums

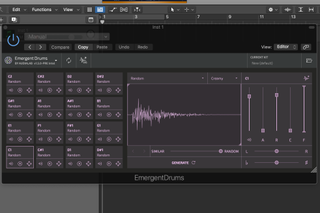

Opening up Emergent Drums, we’re presented with a window that’ll be familiar to anyone who’s used a drum machine before. On the left, we’ve got 16 pads, each of which has buttons to mute, play and drag a given sample to a different pad. On the right-hand side, there’s a more detailed view for fine-tuning the selected sample.

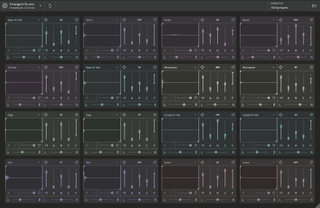

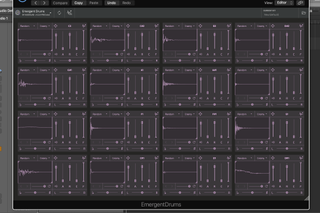

If we hit the main menu in the top left corner, we can change our view mode from Compact to Large, which gives us a detailed view of all 16 samples at once. Within each sample’s window, there are controls for attack, release, gain and a filter. There’s also a pitch control, a pan slider and a visualised waveform with arrows to set the sample’s beginning and end points.
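To make those per-pad controls concrete, here’s a minimal sketch of what a sample channel with start and end points, pitch, attack, release, gain and pan might do to a mono sample. It’s a hypothetical illustration, not Emergent Drums’ actual audio engine: the `render_pad` function and its conventions (start and end as fractions of the sample length, pan running from -1 to +1) are our own assumptions, pitch is handled by simple linear-interpolation resampling, and the filter is left out for brevity.

```python
import numpy as np

def render_pad(sample: np.ndarray, start: float, end: float,
               attack_s: float, release_s: float, gain_db: float,
               pitch_semitones: float, pan: float, sr: int = 44100) -> np.ndarray:
    """Render one pad to a (frames, 2) stereo buffer. Hypothetical sketch."""
    # Start/end points, given here as fractions (0..1) of the sample length
    a, b = int(start * len(sample)), int(end * len(sample))
    if b <= a:
        raise ValueError("end point must come after start point")
    x = sample[a:b].astype(float)

    # Pitch by resampling: +12 semitones plays twice as fast (and half as long)
    ratio = 2.0 ** (pitch_semitones / 12.0)
    n_out = max(1, int(len(x) / ratio))
    x = np.interp(np.arange(n_out) * ratio, np.arange(len(x)), x)

    # Linear attack (fade-in) and release (fade-out) ramps
    env = np.ones(n_out)
    n_att = min(int(attack_s * sr), n_out)
    n_rel = min(int(release_s * sr), n_out)
    if n_att > 0:
        env[:n_att] = np.linspace(0.0, 1.0, n_att, endpoint=False)
    if n_rel > 0:
        env[-n_rel:] *= np.linspace(1.0, 0.0, n_rel)

    # Gain in decibels: +20 dB multiplies the amplitude by 10
    x = x * env * 10.0 ** (gain_db / 20.0)

    # Constant-power pan: -1 = hard left, 0 = centre, +1 = hard right
    theta = (pan + 1.0) * np.pi / 4.0
    return np.stack([x * np.cos(theta), x * np.sin(theta)], axis=1)
```

The constant-power pan law is one common choice among several; it keeps a centred sound from seeming louder or quieter than one panned hard to one side.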

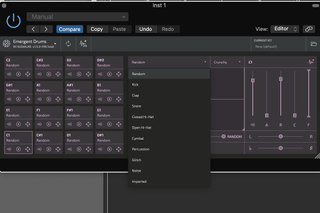

Back in the compact window, at the bottom of the selected sample’s view, there’s a Generate button which enables us to create a new sound. This will use one of two models (Crunchy or Creamy) specified at the top of the window, and we’re able to choose which type of sound we’d like to create from a list that includes kick, snare, hi-hat, glitch, noise and more, including an option for a randomly generated drum sound.

If you’ve found a sound that you’re keen on but want to explore some variations, you can move a slider between Similar and Random: the closer it sits to Similar, the more closely newly generated sounds will resemble the original without being identical to it. By dragging sounds to a new pad and generating variations, you could create a whole kit’s worth of variations on one sound.
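Audialab hasn’t said how the Similar/Random slider works under the hood. One common approach in generative models, offered here purely as an assumption, is to represent each sound as a latent vector and blend the reference sound’s vector with fresh random noise before decoding it into audio. The hypothetical `variation_latents` function below shows that blend; the decoder that would turn each vector into a drum sample is omitted.

```python
import numpy as np

def variation_latents(z_ref: np.ndarray, amount: float, n: int,
                      seed=None) -> np.ndarray:
    """Return n latent vectors derived from a reference latent.

    amount = 0.0 -> identical to z_ref (fully "Similar")
    amount = 1.0 -> independent random draws (fully "Random")
    Hypothetical: assumes a generator whose latents are standard normal.
    """
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must lie between 0 and 1")
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal((n, z_ref.shape[0]))
    # Square-root weights keep the variance at 1 if z_ref is itself standard normal
    return np.sqrt(1.0 - amount) * z_ref + np.sqrt(amount) * noise
```

With the slider at 0, every variation reuses the reference vector; at 1, each is an independent random draw, and settings in between drift progressively further from the original.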

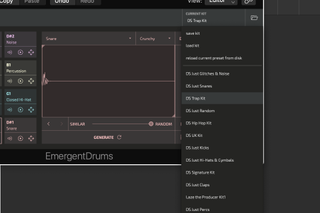

Like a regular drum machine, Emergent Drums is equipped with a number of pre-made kits to explore, and you can load it up with existing samples too. Where’s the fun in that, though? Instead, hit the circular arrows at the top of the plugin window to instantly create new sounds in each drum slot, and start auditioning the results below to build your kit.

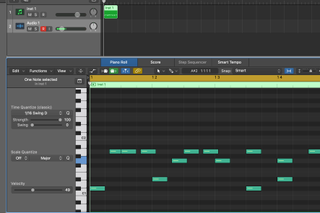

Once you have 16 sounds you’re happy with, you can start programming drum patterns using MIDI, or you can drag the sounds out of Emergent Drums to create audio files to use elsewhere. The plugin has multi-out functionality, so you can route your drums out separately to be processed individually in your DAW. Finally, don’t forget to save the kit you've created.